In this tutorial we will build a Docker image and learn about some of the mechanics behind the multi-stage build process.

What you learn in this tutorial will help with the follow-up article on building a .NET Core application in Docker, which takes full advantage of the sometimes confusing optimisations of multi-stage builds.

If you’ve read this article before, jump straight to the Sloth Summary for helpful commands!

What is a Docker Image?

In the getting started with Docker series introduction we likened the process of Docker running images to double clicking a .exe on a Windows machine. If this vague statement left you wanting, you’ve come to the right place!

While getting started, let’s think of a Docker image as a set of instructions for creating a Docker container. In the Docker workflow, we build a Dockerfile into an image and then run the image to create a container.

If we think of the Docker engineering workflow in .NET terms, the image would represent a class definition and the container would be an instance of that class at runtime. This means that, much like .NET classes, Docker images can be extended, shared and reused by us or even the Docker Daemon.

Creating a Dockerfile

In order to see how a multi-stage build works we will incrementally build the stages of a Dockerfile. After each build we will observe the filesystem to see what has been produced.

Start by creating a new folder on your computer. This tutorial uses a folder called tutorial, which we will refer to from here on. Next, create a new file in this folder called Dockerfile without an extension.

Using your text editor of choice, copy the snippet below into the Dockerfile that you just created.

FROM alpine:latest as Stage1
WORKDIR BaseDirectory
RUN mkdir Folder1
RUN touch Stage1TestFile.txt

# FROM alpine:latest as Stage2
# WORKDIR BaseDirectory
# RUN mkdir Folder2
# RUN touch Stage2TestFile.txt

Let’s take a look at what this is doing:

FROM alpine:latest as Stage1

The FROM command will pull the Alpine Linux image tagged latest from Docker Hub when our image is built, if it is not already available on your machine. This image is a lightweight Linux operating system (around 5MB in size) that will allow us to make folders and files within our container.

The image that we are creating will build upon this as our base image; the extensibility of Docker images in action.

WORKDIR BaseDirectory

The WORKDIR command sets a working directory for each command that follows it. Think of this as using cd in PowerShell to change into a known directory, creating it if it does not already exist.

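For this tutorial the command will create a BaseDirectory folder in the root of the container. Because we gave WORKDIR a relative path, it is resolved against the current working directory, which is the root of the container when first used; subsequent relative paths are resolved against the last WORKDIR. An absolute path such as /BaseDirectory always starts from the root.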
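To make the relative behaviour concrete, here is a small sketch that is separate from the tutorial’s Dockerfile. The Nested and /Elsewhere paths are made up for illustration, and the comments assume that the base image leaves the working directory at the root of the container.

```dockerfile
FROM alpine:latest

# Relative path, first use: resolved against the root, giving /BaseDirectory
WORKDIR BaseDirectory

# Relative path again: resolved against the last WORKDIR, giving /BaseDirectory/Nested
WORKDIR Nested

# Absolute path: ignores the previous WORKDIR, giving /Elsewhere
WORKDIR /Elsewhere

# Prints the current working directory in the build output
RUN pwd
```

Many Dockerfiles use absolute paths for WORKDIR so that the result does not depend on what came before it.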

RUN mkdir Folder1

The RUN command will, as the name implies, run a command. It will use a pre-defined shell to execute this command based on the operating system; sh on Linux and cmd on Windows.
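As a rough illustration, Docker also accepts a second way of writing RUN, known as the exec form, which skips the shell altogether. The sketch below is not part of the tutorial’s Dockerfile; it only contrasts the two forms using the same commands.

```dockerfile
FROM alpine:latest

# Shell form: on Linux this is executed as /bin/sh -c "mkdir Folder1"
RUN mkdir Folder1

# Exec form: a JSON array that runs the touch program directly, without a shell
RUN ["touch", "Stage1TestFile.txt"]
```

The shell form is what we use throughout this tutorial, as it reads just like the command you would type into a terminal.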

mkdir is a Linux command that will create a folder. In Stage1 we use this command to create a folder called Folder1 in the working directory.

touch is a Linux command that will create an empty file. In Stage1 we use this command to create a file called Stage1TestFile.txt in the working directory.

The resulting file system should look something like this.

BaseDirectory/
├─ Stage1TestFile.txt
├─ Folder1/

A nice simple structure!

# FROM alpine:latest as Stage2

Finally, any line starting with a # is commented out and will be ignored when building the image. We will uncomment these lines after inspecting the results of Stage1’s build.

Building the Dockerfile

Now that we have defined our first iteration of the Dockerfile we can build it. Start by opening a PowerShell terminal, navigate to the tutorial folder that you created and run the following command.

docker build -t tutorial-image .

Let’s explore this command:

- docker build tells Docker that we want to build an image (duh!)

- -t tutorial-image tags the built image, so that we can reference it by a readable name, instead of its auto-generated id

- . sets the build context to the current folder, which tells Docker to send the files in it to the Docker Daemon. This also picks up the default-named Dockerfile to build it.

After finishing this tutorial it might be worth looking into the details of the build command here. You’ll be able to learn how to use custom named Dockerfiles, build arguments and more.

Run the following command to view the created image.

docker image ls

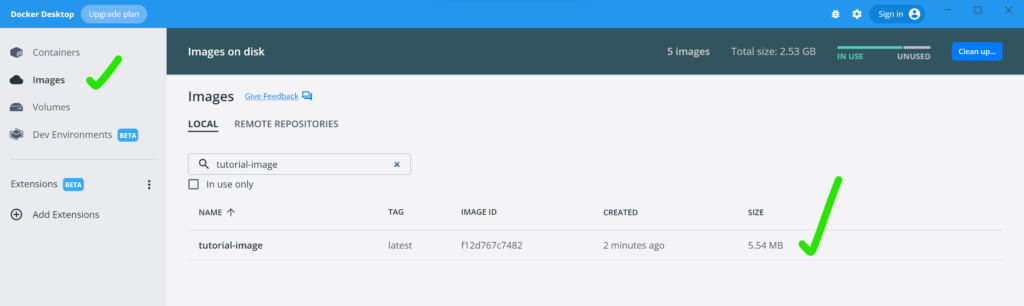

You should see output similar to the below.

REPOSITORY       TAG      IMAGE ID       CREATED          SIZE
tutorial-image   latest   f12d767c7482   36 minutes ago   5.54MB

You can also view the image in the Docker Dashboard.

Running the Image and Exploring the Container

Run the following command to tell Docker to create and run a new container using our image.

docker run --name tutorial-container -it --rm tutorial-image

Let’s explore this command:

- docker run tells Docker that we want to create and start a container from an image

- --name tutorial-container names the container tutorial-container

- -it keeps standard input open (-i) and allocates a teletype, or pseudo-terminal (-t), which will cause the container to sit and listen for input from our PowerShell terminal. Without this our container would immediately exit, as it doesn’t do anything

- --rm will automatically remove the container once it exits

- tutorial-image is the name of the image that we created above. This is fetched from our local Docker image collection

At this point your terminal will be left sitting in the BaseDirectory in the Linux shell.

/BaseDirectory #

Run the following command to list the files of the current directory.

ls -l

You’ll now see Stage1TestFile.txt and Folder1 that we defined in the Dockerfile.

total 4
drwxr-xr-x 2 root root 4096 Aug 10 12:05 Folder1
-rw-r--r-- 1 root root 0 Aug 10 12:05 Stage1TestFile.txt

Type exit and hit enter to return to the PowerShell terminal. This will cause the container to be torn down for us automatically.

This exercise has confirmed for us that by the end of Stage1’s build we have:

- A working directory called BaseDirectory

- A folder within the working directory called Folder1

- A file within the working directory called Stage1TestFile.txt

This might not seem very significant at the moment, but keep this information fresh in mind as we move into iteration 2.

Sloth Side Quest

To keep things simple we commented out the second half of the Dockerfile. This allows us to physically see that the docker build process has nothing else to run after completing Stage1.

However, Docker allows you to specify a “stopping point” in a multi-stage build.

This means that instead of commenting out the second half of the file we could have run the following command to build an image that produces the same result as above.

PS C:\_dev\tutorial> docker build -t tutorial-image --target=Stage1 .

Setting --target=Stage1 will cause the Docker build process to stop after completing the stage called Stage1.

Stage2: Building the Whole Dockerfile

Things get a little bit confusing after we build the entire Dockerfile.

Start by removing the comments from the second half of the file.

FROM alpine:latest as Stage1
WORKDIR BaseDirectory
RUN mkdir Folder1
RUN touch Stage1TestFile.txt

FROM alpine:latest as Stage2
WORKDIR BaseDirectory
RUN mkdir Folder2
RUN touch Stage2TestFile.txt

Stage2 is very similar to Stage1, with a couple of small changes:

- Stage1 and Stage2 both start in a working directory called BaseDirectory

- Stage1 and Stage2 both create folders, however the folder for each stage has a different name

- Stage1 and Stage2 both create files, however the file for each stage has a different name

Build the Dockerfile using our previous command.

docker build -t tutorial-image .

This will move the tutorial-image tag from our existing image, which we no longer require, to the newly built one.

Once again run the container using our previous command.

docker run --name tutorial-container -it --rm tutorial-image

After you’re placed back into the familiar BaseDirectory, once again list the contents of the current directory.

ls -l

This time we see something different. But where did our Stage1 artefacts go?

/BaseDirectory # ls -l
total 4
drwxr-xr-x 2 root root 4096 Aug 15 11:12 Folder2
-rw-r--r-- 1 root root 0 Aug 15 11:12 Stage2TestFile.txt

At this point we have a file system that looks like this.

BaseDirectory/
├─ Stage2TestFile.txt
├─ Folder2/

Starting FROM Scratch

We use the FROM keyword in a multi-stage build to start a new stage that extends a base image. In the example above we started both stages from the same Alpine Linux image. We only did this to keep the tutorial simple though.

In reality you can start a new stage with any base image that you want. This is because each new stage starts afresh from its own base image. Nothing from the previous stage’s file system is carried over.

This explains why we only saw Stage2’s folder and file after running the complete Dockerfile. Despite having the same base image and working directory in both stages, Stage2 was not a continuation of Stage1.

It’s important to understand that if you need content from a previous build stage (which is often the case) you can use a COPY command with the --from option to name the previous stage that you’d like to copy from. This clean slate and copy methodology allows us to create the smallest possible final image by not only leaving unimportant build artefacts behind, but also allowing us to switch to the smallest possible base image.
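As a minimal sketch of copying between stages, the tutorial’s Dockerfile could be extended with one extra line at the end of Stage2. It assumes that Stage1 is left unchanged, so that its file lives at /BaseDirectory/Stage1TestFile.txt.

```dockerfile
FROM alpine:latest as Stage1
WORKDIR BaseDirectory
RUN mkdir Folder1
RUN touch Stage1TestFile.txt

FROM alpine:latest as Stage2
WORKDIR BaseDirectory
RUN mkdir Folder2
RUN touch Stage2TestFile.txt

# Copy a single file out of Stage1 into Stage2's working directory
COPY --from=Stage1 /BaseDirectory/Stage1TestFile.txt .
```

Rebuilding the image and running ls -l in the container should then list Stage1TestFile.txt alongside Stage2’s folder and file, while Folder1 stays behind in Stage1.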

.NET Core applications use different images in the different stages of their Docker builds. We’ll cover this in more detail in another article, but the general gist is that:

- The build stage is completed on an SDK-based image. SDK images are required for building applications, however they are quite large

- The final stage of the build starts a fresh image using a .NET Core runtime base image, which is much smaller than its SDK counterpart
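The two stages described above might look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the 8.0 image tags, the MyApp name, and a build context that contains a single project. The follow-up article covers the real thing.

```dockerfile
# Build stage: the large SDK image, which contains the compiler and tooling
FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build
WORKDIR /src
COPY . .
RUN dotnet publish -c Release -o /app/publish

# Final stage: the much smaller runtime image, starting from a clean slate
FROM mcr.microsoft.com/dotnet/runtime:8.0 AS final
WORKDIR /app

# Only the published output is carried over; the SDK and source code stay behind
COPY --from=build /app/publish .

# MyApp.dll is a hypothetical assembly name
ENTRYPOINT ["dotnet", "MyApp.dll"]
```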

At this point we understand why we lost the files from Stage1. However, starting from scratch each time must slow down the build process, right?

Building Images with the Build Cache

Multi-stage builds are a Docker best practice. This is because we can use them in conjunction with the build cache to increase the performance of our builds. The caching happens without us even knowing it!

Up to this point we’ve spoken about images as a set of instructions for creating a container. In reality there’s a little more to this story:

- An image is a collection of read-only layers

- A new layer is created during the build process whenever a Dockerfile command causes a change to the filesystem

- Each layer represents only the differences between itself and the previous layer

Did you notice that as Docker pulled the Alpine Linux image from Docker Hub, PowerShell displayed several lines with independent download progress information? These were the individual layers of the base Alpine image being fetched from the server.

This is significant, because Docker caches these layers on your local machine and leverages them while building your Dockerfile. This article covers the details of how Docker checks the cache (for example using checksums for certain commands); however, it has too much information to cover in this tutorial.

If a layer is found in the cache, be it from your base image or your new image, Docker will not recreate the layer. This means that slow operations such as downloading files from the internet (in the case of retrieving our Alpine Linux base image, or running dotnet restore while building a .NET Core application) only need to be run once. Subsequent builds will use the cached layer as long as the dependencies don’t change.
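One common way to get the most out of the cache is to order the commands so that the slow, rarely changing steps come first. The sketch below illustrates the idea for the dotnet restore case. The 8.0 image tag and the MyApp.csproj file name are assumptions, as is a .dockerignore file that excludes the local bin and obj folders.

```dockerfile
FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build
WORKDIR /src

# Copy only the project file first. This layer, and the restore below,
# are reused from the cache until MyApp.csproj (hypothetical name) changes
COPY MyApp.csproj .
RUN dotnet restore

# Source code changes only invalidate the cache from this point onwards
COPY . .
RUN dotnet publish -c Release -o /app/publish --no-restore
```

With this ordering, editing a source file should leave the restore step marked as CACHED on the next build.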

Running docker build for a second time during the tutorial would have produced an output similar to this.

PS C:\_dev\tutorial> docker build -t tutorial-image .
[+] Building 3.2s (8/8) FINISHED
=> [internal] load build definition from Dockerfile 0.1s
=> => transferring dockerfile: 32B 0.0s
=> [internal] load .dockerignore 0.1s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/alpine:latest 2.9s
=> [stage2 1/4] FROM docker.io/library/alpine:latest@sha256:bc41182d7ef5ffc53a40b044e725193bc10142a1243f395ee852a8d9730fc2ad 0.0s
=> CACHED [stage2 2/4] WORKDIR BaseDirectory 0.0s
=> CACHED [stage2 3/4] RUN mkdir Folder2 0.0s
=> CACHED [stage2 4/4] RUN touch Stage2TestFile.txt 0.0s
=> exporting to image 0.0s
=> => exporting layers 0.0s
=> => writing image sha256:201512f094b060b7ba9cb25d0bb3e688087461b9dd55f57781302e4af9b1f940 0.0s
=> => naming to docker.io/library/tutorial-image

Each line starting with CACHED has leveraged an existing layer.

While a container is run from an image and an image consists of layers, the main difference between a container and an image is that a container has a thin “writable” layer that sits on top of the image’s read-only layers. All modifications to the file system that are made while a container is running happen in this thin layer and are discarded when the container is torn down, leaving the image unchanged.

Viewing Docker Image Layers

Most of the time we shouldn’t need to care about the layers that are created for an image. However, if you’re trying to optimise the size of your image it can be helpful to see the specific steps that caused its layers to be generated. You can then restructure your Dockerfile, for example by adding build stages, to reduce the overall layer count.
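Besides adding stages, another simple way to lower the layer count is to chain related commands into a single RUN. As a small sketch based on Stage2 of our tutorial Dockerfile:

```dockerfile
FROM alpine:latest as Stage2
WORKDIR BaseDirectory

# One RUN command produces one layer, instead of one for mkdir and one for touch
RUN mkdir Folder2 && touch Stage2TestFile.txt
```

The resulting file system is the same as before, but the image should contain one less layer.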

You are able to view the layers of an image by running the following command.

docker history <image ID or tag name>

You’ll see something similar to this.

IMAGE          CREATED       CREATED BY                                      SIZE     COMMENT
201512f094b0   4 days ago    RUN /bin/sh -c touch Stage2TestFile.txt # bu…   0B       buildkit.dockerfile.v0
<missing>      4 days ago    RUN /bin/sh -c mkdir Folder2 # buildkit         0B       buildkit.dockerfile.v0
<missing>      9 days ago    WORKDIR /BaseDirectory                          0B       buildkit.dockerfile.v0
<missing>      10 days ago   /bin/sh -c #(nop) CMD ["/bin/sh"]               0B
<missing>      10 days ago   /bin/sh -c #(nop) ADD file:2a949686d9886ac7c…   5.54MB

Our key takeaways from this command are:

- The CREATED BY column is helpful in describing the step that caused each layer to be created

- Our tutorial-image only contains the layers from the final build stage; we don’t see any layers created by Stage1

- The <missing> image entries are described in detail in this article by Nigel Brown

Sloth Summary

Multi-stage builds involve a lot of concepts to wrap your head around and this tutorial has only scratched the surface of some of them. Once the tutorial has had some time to sink in, be sure to check out the links in this article to delve a little deeper into the concepts of building Docker images.

Helpful Commands

Building a Docker image.

docker build -t <image tag> .

Running a Docker image.

docker run --name <name to give to container> -it --rm <image tag or id>

Listing Docker images.

docker image ls

Filtering the Docker image list by image name (reference).

docker image ls --filter=reference=tutorial-image