“I don’t want to sit there typing out every command; it’s faster to just write the code.” This is the common barrier that prevents engineers, myself included, from trying an AI tooling assistant. Several weeks ago, though, I dedicated some time to giving this AI stuff a solid crack and discovered something not often discussed among engineers: how to automate engineering workflows with Cursor AI and Claude for a truly hands-free programming experience.

Note: this blog post does not advocate vibe coding. You are responsible and accountable for every line of code you write and deploy to a production environment; AI automation is merely a way to reduce the physical burden of typing the changes.

Code Sloth Mentality: Flipping AI Assistant Engineering On Its Head

Most of the time, it is just faster writing the code. At the time of writing, Cursor with Claude does not do a great job of painting on a fresh canvas and writing code from scratch. At least not in the Java test cases that I have explored.

But does this make the tool useless?

Instead of having to constantly prompt Cursor for each step that you want to take, what if it could instead just follow a templated list of instructions? What if it prompted you when it required inputs, instead of you constantly prompting it?

This realisation was what opened my eyes to the power of an AI assistant in commercial software engineering, where a portion of an engineer’s time is spent “colouring in between the lines” of existing designs and architecture.

Common Workflows and Colouring in Between the Lines

Not all software engineering is greenfield development. In fact, if you work for a company that has been around for a few years, there will likely be an abundance of existing standards and patterns that you will need to work within (and likely a fair share of minefields that veterans know to avoid).

It is a fascinating paradox in our industry; we aspire to create the best, most extensible, understandable designs. However, in achieving this goal, we suck the very life out of future work within the space. Problem-solving is reduced to adding a new property to a Data Transfer Object (DTO) here and there, and the rest of the “plumbing” does the hard work for us.

Examples of this work in the space of Elasticsearch / OpenSearch might include:

- Ingesting a new data point into the cluster

- Exposing a new filter on a web API to search a new data point in a cluster

- Creating git commits and pull requests for the changes that are being authored

- Running scripts that support the engineering workflow, perhaps to authorize connectivity to cloud resources, or perform local operations

The Base Project

This blog post will demonstrate how we can automate the workflow of adding new types of data into a fictional system. The sample code can be found in the Java code samples public repo from the Code Sloth Code Samples page.

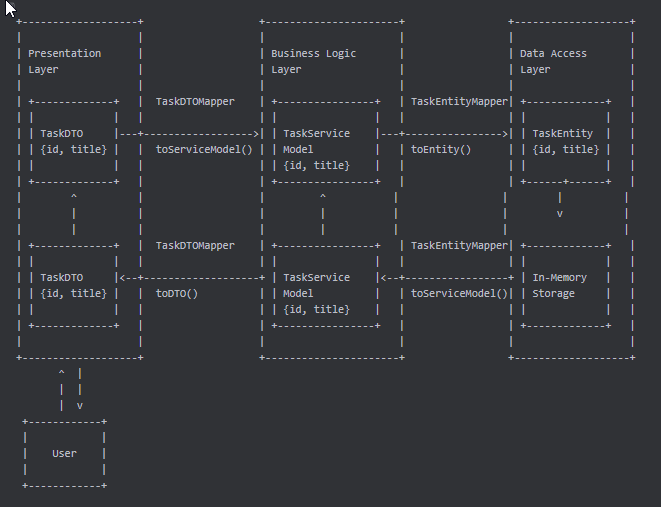

The base project has the structure of an N-tier monolith, which gives us a few layers to work with. Each of the different layers of the monolith has its own models, which require mapping to pass data from one end of the stack to the other.
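To make the layering concrete, here is a minimal sketch of a single value travelling from one end of the stack to the other. Only the TaskDTO name comes from this post; TaskModel, TaskEntity, TaskMapper and the title field are hypothetical names chosen for illustration and may not match the sample repo.

```java
// Hypothetical three-layer models. Only the TaskDTO name appears in this post;
// every other name here is an assumption made for illustration.

// Presentation layer model
final class TaskDTO {
    private final String title;
    TaskDTO(String title) { this.title = title; }
    String getTitle() { return title; }
}

// Business logic layer model (hypothetical name)
final class TaskModel {
    private final String title;
    TaskModel(String title) { this.title = title; }
    String getTitle() { return title; }
}

// Data access layer model (hypothetical name)
final class TaskEntity {
    private final String title;
    TaskEntity(String title) { this.title = title; }
    String getTitle() { return title; }
}

// Copies data from one layer's model to the next (hypothetical name)
final class TaskMapper {
    private TaskMapper() {}

    static TaskModel toModel(TaskDTO dto) {
        return new TaskModel(dto.getTitle());
    }

    static TaskEntity toEntity(TaskModel model) {
        return new TaskEntity(model.getTitle());
    }
}

class LayerMappingDemo {
    public static void main(String[] args) {
        TaskDTO dto = new TaskDTO("Write blog post");
        TaskEntity entity = TaskMapper.toEntity(TaskMapper.toModel(dto));
        System.out.println(entity.getTitle()); // prints "Write blog post"
    }
}
```

Every new field has to be threaded through each of these classes and each mapper method, which is exactly the repetitive work that a specification file can describe.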

Cursor was able to have Claude generate this textual representation of the mapping between presentation layer, business logic layer and data access layer to better visualise the flow.

This type of structured workflow requires little imagination to work within and is a perfect candidate for workflow automation with Cursor by introducing specification files. Let’s look at how we can quickly introduce more data into the fictional system.

Creating a Specification Directory

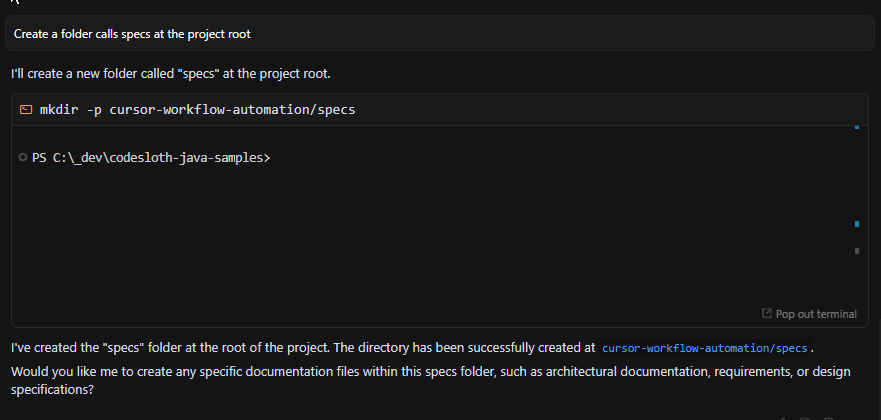

Having all of your specification files in a single location is helpful. Let’s start by asking Cursor to create a specs folder at the root of our project.

Create a folder called specs at the project root

Putting Cursor in Learning Mode

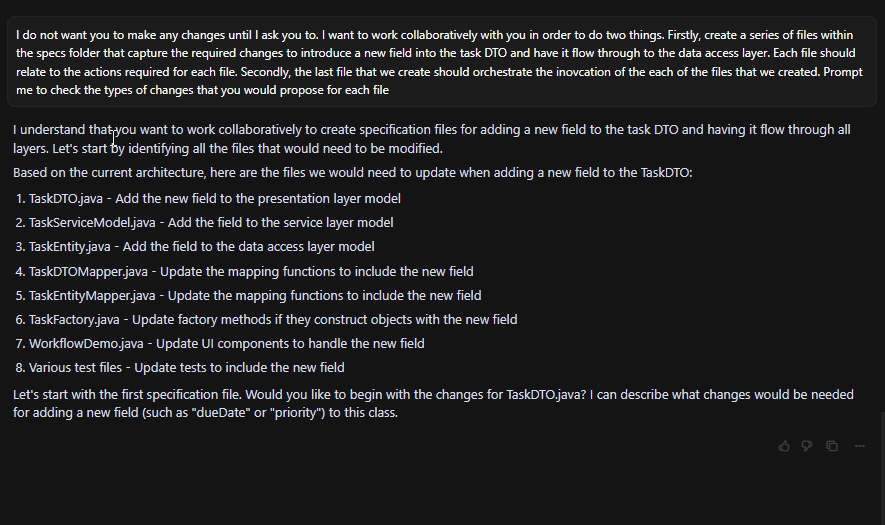

This example is funny, as the nondeterminism of AI engineering shines through. Cursor interpreted the command “do not make any changes” loosely, and decided to stage prospective changes in files; it turned out to be a good decision! However, to split hairs, it went and made the changes anyway.

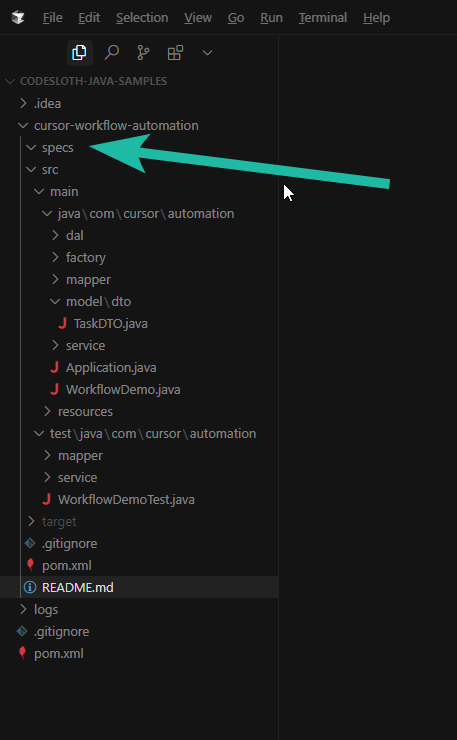

I do not want you to make any changes until I ask you to. I want to work collaboratively with you in order to do two things. Firstly, create a series of files within the specs folder that capture the required changes to introduce a new field into the task DTO and have it flow through to the data access layer. Each file should relate to the actions required for each file. Secondly, the last file that we create should orchestrate the invocation of each of the files that we created. Prompt me to check the types of changes that you would propose for each file

yes

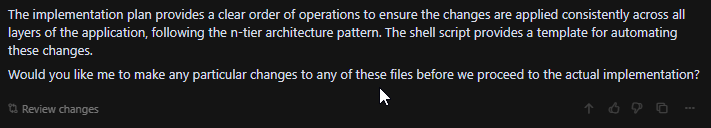

Despite being told not to do anything, Cursor drafts changes for review and asks for feedback once it is finished.

At this point, it has generated many draft files containing a sequenced order for updating a field. This also included prompts for updating unit tests.

The Implementation Plan

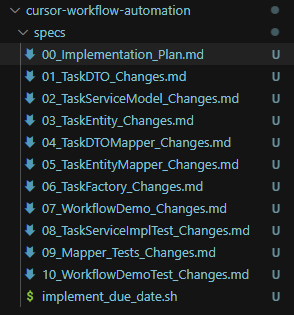

Cursor has decided to base each file around the example of adding a Due Date field to the system. As shown below, the overall orchestration “implementation plan” file makes multiple references to it.

Conceptually, the implementation plan is responsible for calling each of the individual step files. This type of file is helpful, as you can also use it to prompt Cursor to create Git branches and Pull Requests of small change sets, building into a large epic of work.

Here we can see specific ReadMe links to the step files, a relative path reference and a summary of the changes that the file will make.

The Implementation Steps

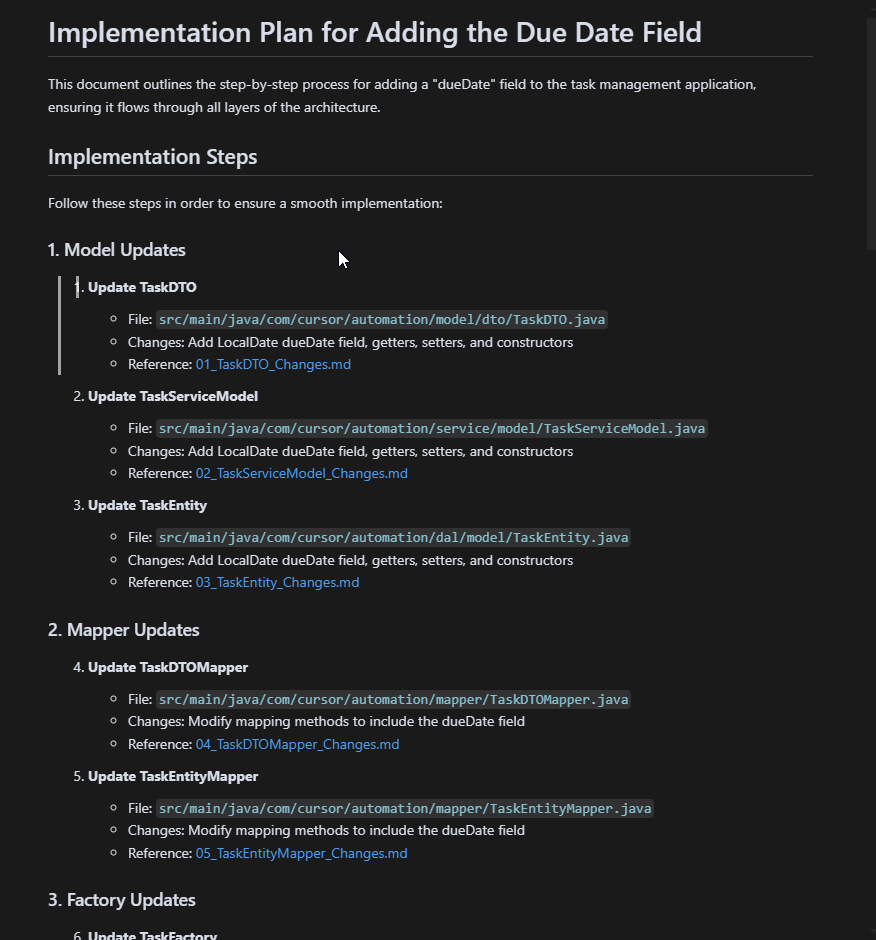

Each step contains a series of implementation details.

Here we can see that to update TaskDTO, we need to:

- Add a new field

- Update constructors

- Add getter and setter methods

- Update toString() to include the new field
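Applied to the Due Date example, the four steps above might produce something like the sketch below. The title field, the dueDate field name and the LocalDate type are assumptions made for illustration; the real class in the sample repo may look different.

```java
import java.time.LocalDate;

// Sketch of a TaskDTO after the four steps have been applied.
// The `title` and `dueDate` names and the LocalDate type are assumptions.
class TaskDTO {
    private String title;
    private LocalDate dueDate; // Step 1: the new field

    TaskDTO() {}

    // Step 2: the constructor accepts the new field
    TaskDTO(String title, LocalDate dueDate) {
        this.title = title;
        this.dueDate = dueDate;
    }

    String getTitle() { return title; }
    void setTitle(String title) { this.title = title; }

    // Step 3: getter and setter for the new field
    LocalDate getDueDate() { return dueDate; }
    void setDueDate(LocalDate dueDate) { this.dueDate = dueDate; }

    // Step 4: toString() includes the new field
    @Override
    public String toString() {
        return "TaskDTO{title='" + title + "', dueDate=" + dueDate + "}";
    }
}

class TaskDtoDemo {
    public static void main(String[] args) {
        TaskDTO task = new TaskDTO("Write blog post", LocalDate.of(2025, 1, 31));
        System.out.println(task); // prints TaskDTO{title='Write blog post', dueDate=2025-01-31}
    }
}
```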

The Testing Steps

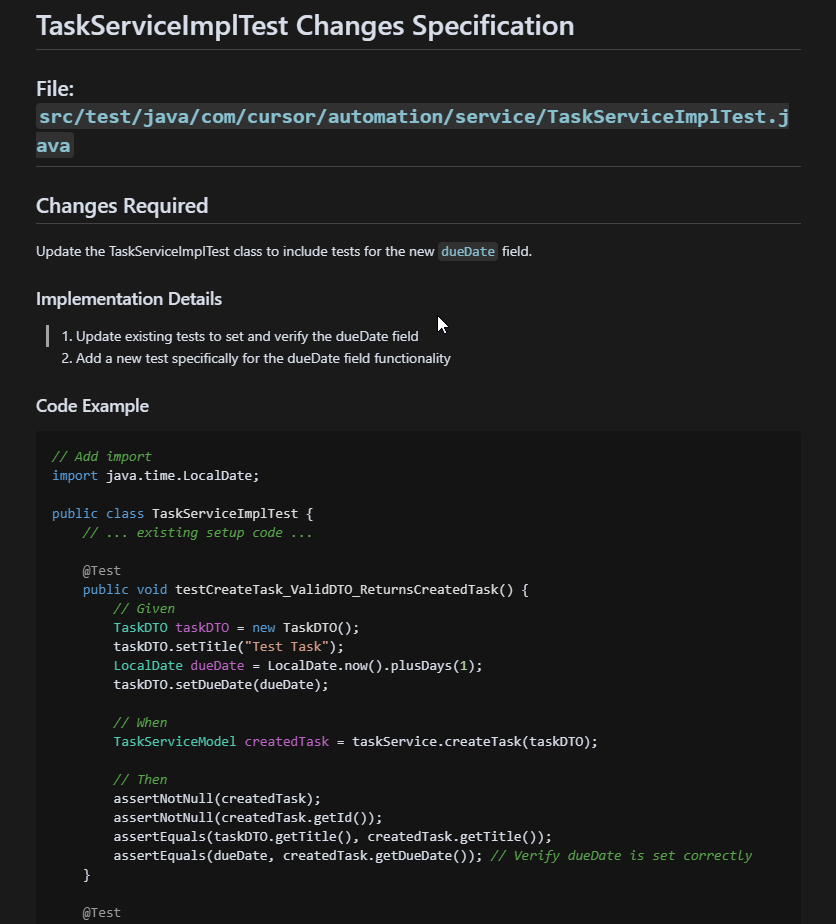

It even generated steps for updating the unit test files for each class. An example can be found below.

Side Note: Generating Specifications for Complex Codebases

If you are working in a complex codebase this output won’t be produced quite so simply.

Claude will naturally get confused if your code has many decorator patterns that see a class aggregate dependencies of its own type. This may be because it is unable to retain a large enough context to understand how the Dependency Injection framework has been configured to resolve specific instances that would be leveraged at runtime.

In this case, you will need to provide additional prompts to Cursor to consider other specific files that you know of to help fill in the gaps. Build this picture incrementally. Make many small commits.

The important thing to note is that, as we will see in the next section, you don’t ever need to write or maintain these files by hand.

Refining the Suggested Specifications

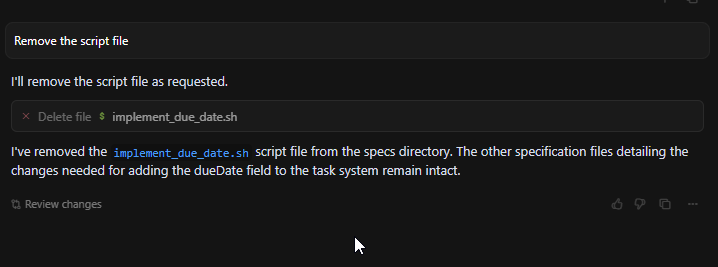

I don’t want the script file that it produced.

Remove the script file

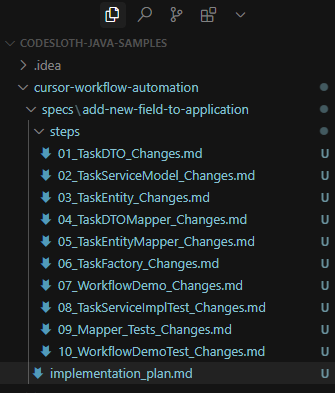

I also want the plan to be in a higher-level directory than the steps. This helps us conceptually understand the spec’s goal and allows us to create many steps without cluttering things.

Create a subfolder under specs that will contain the implementation plan called “add-new-new-field-to-application”. Then create a subfolder called steps, and add all other files to it.

Claude then goes off, makes the folder changes, and updates the readme file links to maintain integrity.

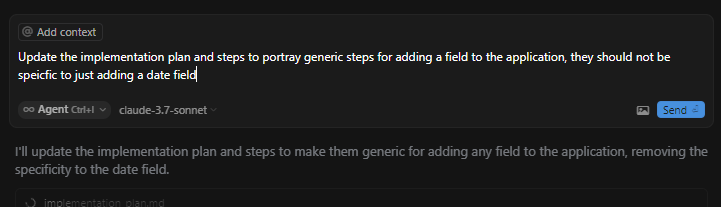

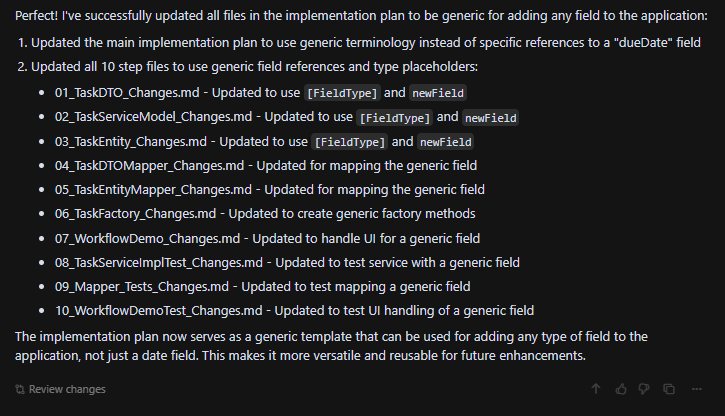

I also don’t want the steps to specifically discuss adding a date field. They should be generic and support any type that we want, be it a primitive type, enum or even complex type such as a record or class.

Update the implementation plan and steps to portray generic steps for adding a field to the application, they should not be specific to just adding a date field

Voila! Without any additional prompting, the spec files are now generic and no longer mention adding a date field. Here we can see that Claude has chosen to templatise the files by referencing its own template concept, FieldType. This isn’t special Claude syntax; it is simply a placeholder that lets the model infer what to substitute later.

The Final Trick – Task Tracking

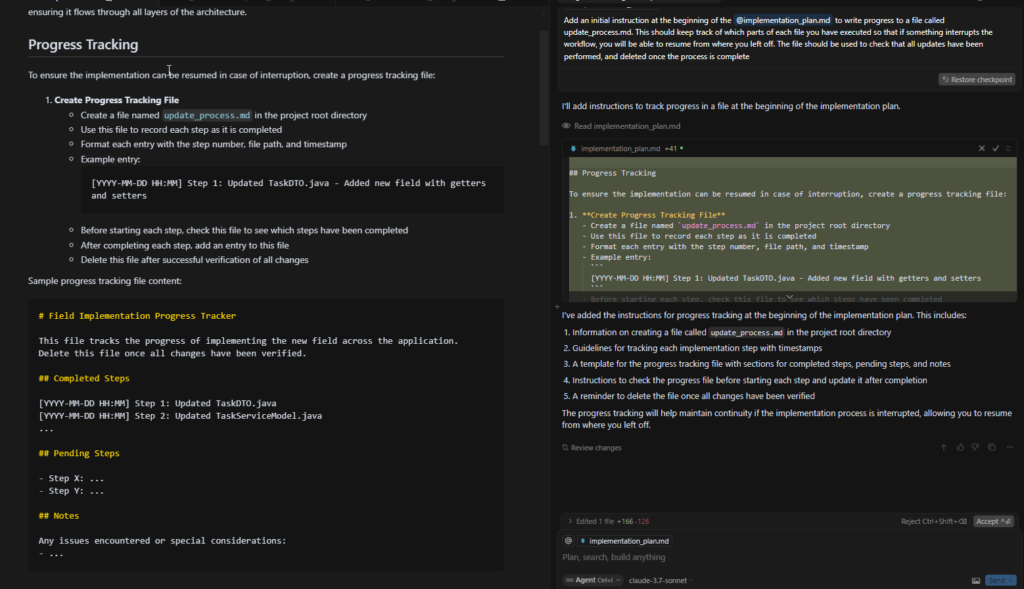

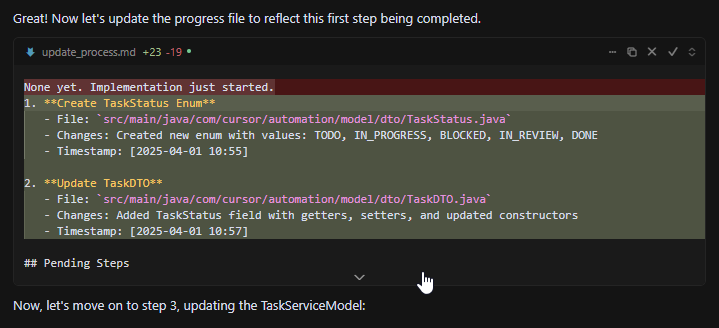

To avoid a huge context window, we will have Cursor write its current state to a file to keep track of which step it is up to at any given time.

In my experience, if Cursor tries to perform a long list of tasks in a large codebase in one effort, it can sometimes spiral out of control. I have seen it skip steps, challenge its own opinions, go back and destroy valid prior work, and generally make a large mess.

In the command below, we ask it to update a specific file to support progress tracking.

Add an initial instruction at the beginning of the @implementation_plan.md to write progress to a file called update_process.md. This should keep track of which parts of each file you have executed so that if something interrupts the workflow, you will be able to resume from where you left off. The file should be used to check that all updates have been performed, and deleted once the process is complete

On the left, you can see a beautiful set of instructions on how to keep track, with an example included. On the right-hand side, the green section shows that it was added to the existing file.

The progress tracking will record each entry with a step number, file path, timestamp and description of the change. It even includes an example!
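If it helps to picture the shape of one tracking entry, it could be modelled roughly as below. This is purely illustrative: the real file is free-form Markdown that Cursor writes itself, and the ProgressEntry name, the bullet layout and the sample path are all hypothetical.

```java
import java.time.Instant;

// Hypothetical model of a single progress entry. Cursor writes the real file
// as Markdown; this type only illustrates the four pieces of data it records.
record ProgressEntry(int step, String filePath, Instant timestamp, String description) {

    // Renders the entry as one Markdown bullet (an assumed layout)
    String toMarkdown() {
        return "- Step " + step + " | " + filePath + " | " + timestamp + " | " + description;
    }
}

class ProgressEntryDemo {
    public static void main(String[] args) {
        ProgressEntry entry = new ProgressEntry(
                1,
                "src/TaskDTO.java", // hypothetical path
                Instant.parse("2025-01-31T09:00:00Z"),
                "Added new field");
        System.out.println(entry.toMarkdown());
    }
}
```

Because each entry names the step and the file it touched, an interrupted run can be resumed by looking for the first step without an entry.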

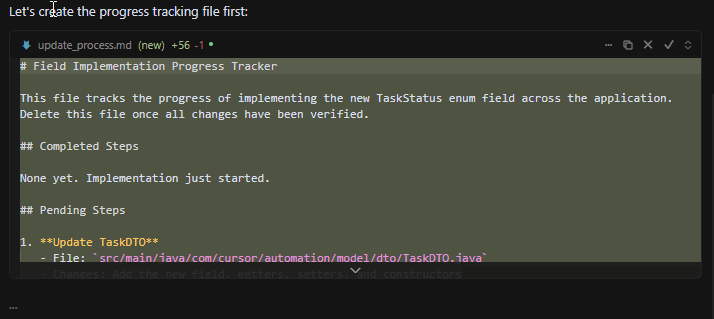

Putting the Implementation Plan to Action!

After just a few minutes of defining the specification files to describe adding a new field to our system, we’re ready to use it!

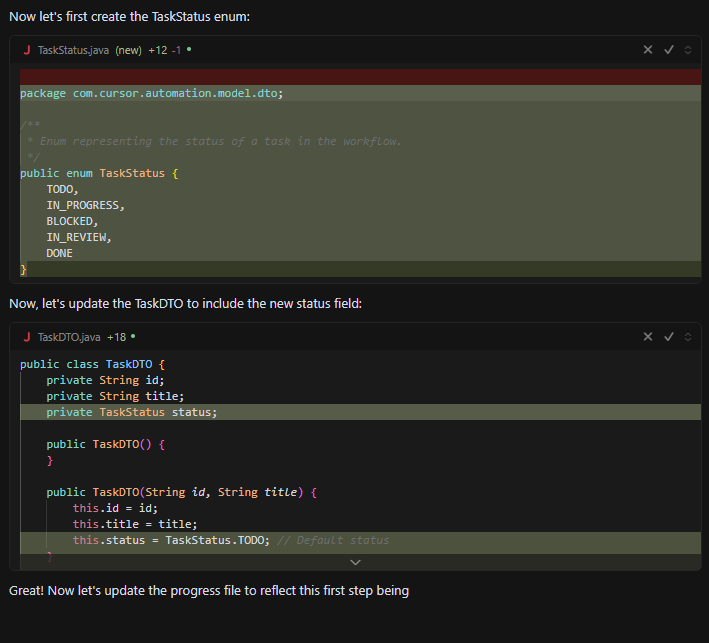

Follow the @implementation_plan.md to add a new enum field to the application. The enum should be the task status, and contain the following values: todo, in progress, blocked, in review, done

Immediately, Cursor looks at the file system and creates the tracking file.

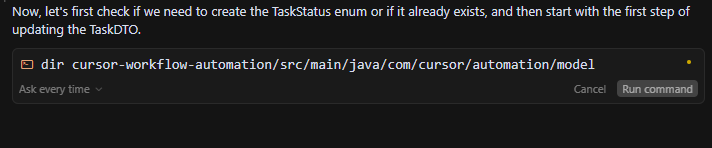

Then, it moves into understanding if something of a similar nature already exists.

It doesn’t, so Cursor creates the enum in a new file and adds a field to the TaskDTO to consume it.
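The result of this first step might look something like the sketch below. The TaskStatus name, the constant names and the status field name are assumptions derived from the prompt; the code that Cursor actually generated may differ.

```java
// Hypothetical enum built from the values listed in the prompt
enum TaskStatus {
    TODO,
    IN_PROGRESS,
    BLOCKED,
    IN_REVIEW,
    DONE
}

// Only the new field is shown; the `status` name is an assumption
class TaskDTO {
    private TaskStatus status;

    TaskStatus getStatus() { return status; }
    void setStatus(TaskStatus status) { this.status = status; }
}

class TaskStatusDemo {
    public static void main(String[] args) {
        TaskDTO task = new TaskDTO();
        task.setStatus(TaskStatus.IN_REVIEW);
        System.out.println(task.getStatus()); // prints IN_REVIEW
    }
}
```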

It then updates the task tracking readme to capture what it has done.

This process then repeats for each step in the workflow. It is truly mind-blowing to watch the tool do this!

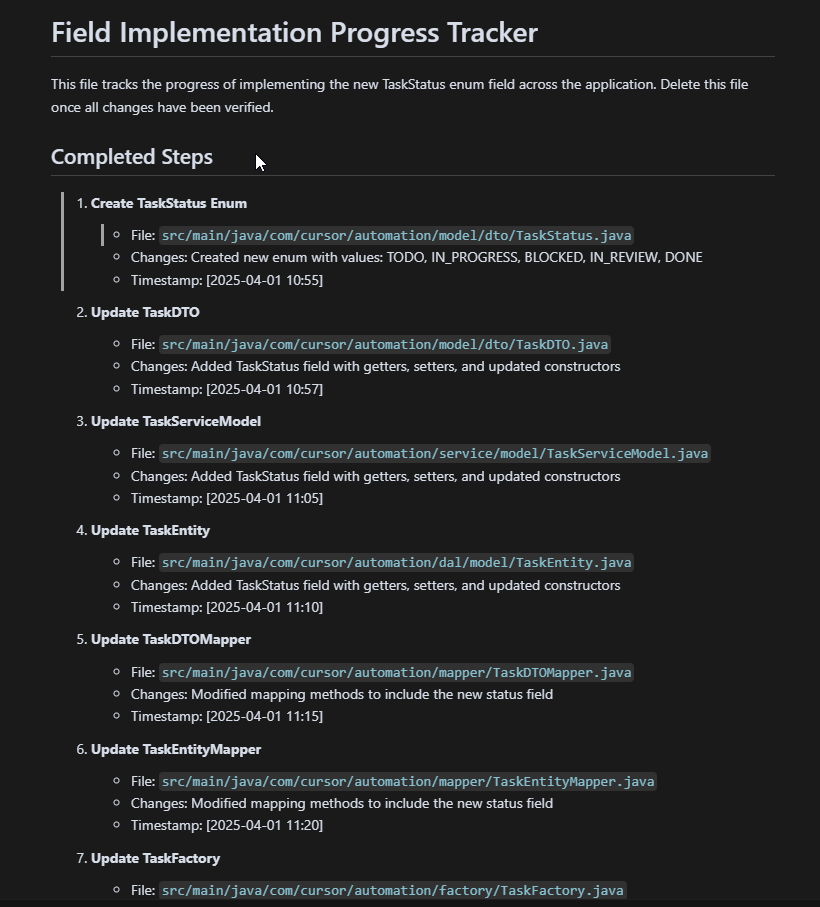

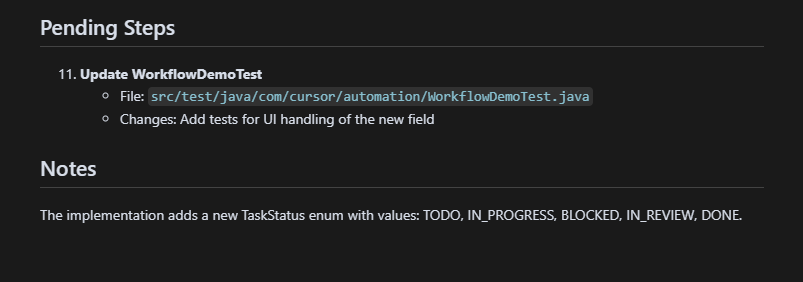

The screenshots below capture the output when the tool was nearly finished. We can see the completed steps, changes made, timestamp, and files changed. At the bottom, we can also see pending tasks and the general request context that it is operating with.

Correcting Mistakes and Codifying Them to Avoid Repeats

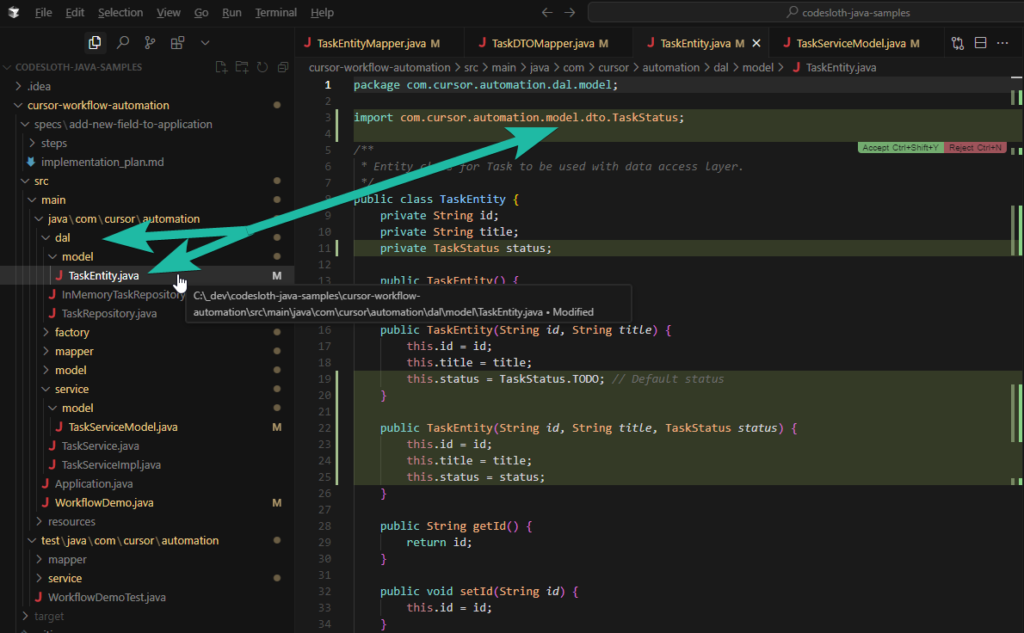

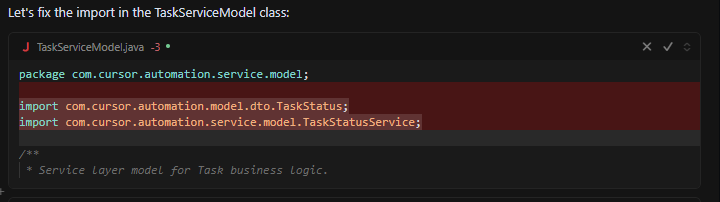

After the process completes, things mostly look great. However, we can see that the Application layer’s Enum value has been used in the Business Logic and Data Access Layer types.

We can not only fix this now but also ensure that this type of problem does not happen again.

The command below makes Claude fix the current problem and repair the workflow.

I would like for you to do two things. Firstly, update the @implementation_plan.md and steps to ensure that new enum values, or any new field which is defined in a new file, is defined in a separate file for each layer: application, business logic and data access layer, and that the mapper classes are updated to map between them. Then apply this logic to the enum that was just added to ensure that it works correctly

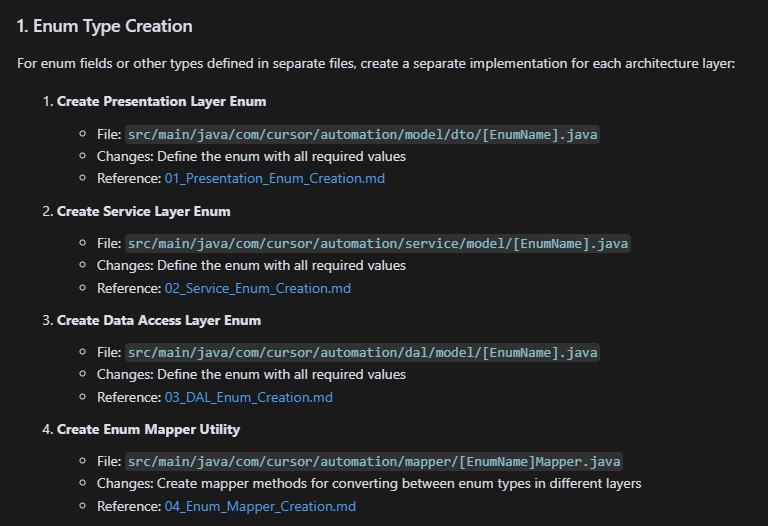

A section is created in the implementation plan to cater for this as follows.

Cursor then generates the expected additional files and automatically updates the existing files to consume the correct type version. Wow—just wow!
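A minimal sketch of what "one enum per layer, plus mappers" can look like is shown below. All of the type names are hypothetical, and mapping by constant name is just one possible approach rather than what Cursor necessarily generated.

```java
// One enum per layer; all names are hypothetical
enum TaskStatusDTO { TODO, IN_PROGRESS, BLOCKED, IN_REVIEW, DONE }    // application layer
enum TaskStatusModel { TODO, IN_PROGRESS, BLOCKED, IN_REVIEW, DONE }  // business logic layer
enum TaskStatusEntity { TODO, IN_PROGRESS, BLOCKED, IN_REVIEW, DONE } // data access layer

// Maps between the layer-specific enums by constant name (an assumed strategy)
final class TaskStatusMapper {
    private TaskStatusMapper() {}

    static TaskStatusModel toModel(TaskStatusDTO dto) {
        return dto == null ? null : TaskStatusModel.valueOf(dto.name());
    }

    static TaskStatusEntity toEntity(TaskStatusModel model) {
        return model == null ? null : TaskStatusEntity.valueOf(model.name());
    }
}

class TaskStatusMappingDemo {
    public static void main(String[] args) {
        TaskStatusEntity entity =
                TaskStatusMapper.toEntity(TaskStatusMapper.toModel(TaskStatusDTO.BLOCKED));
        System.out.println(entity); // prints BLOCKED
    }
}
```

Mapping by name keeps the mapper short, but it throws an IllegalArgumentException at runtime if the enums ever drift apart. An explicit switch per constant is more typing (which is less of a concern when Cursor is doing it) and makes the relationship between layers easier to review.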

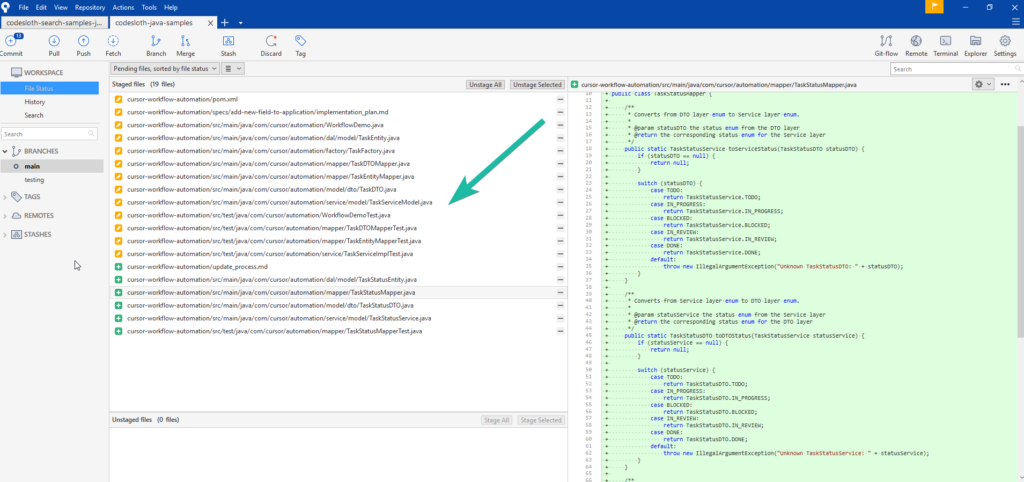

The Change Set: Death by a Thousand Small Changes

None of the changes that were demonstrated in this example required a lot of thinking. However, you cannot deny the sheer amount of typing that this workflow has saved.

All of this was executed in minutes; I challenge an engineer to produce the same output faster without inducing the rapid onset of Repetitive Stress Injury!

In the screenshot above, we can see in SourceTree that new files have been created, and existing files have been modified. The volume of typing that this has saved cannot be overstated! It even generated Javadocs for some methods.

Sloth Summary

As was mentioned at the beginning of the article, Cursor is not great at creating new things with Claude 3.7 Sonnet. In the example above, the unit tests did not compile because Mockito was not added as a dependency in pom.xml.

This was resolved by raising this issue to Cursor, at which point it went off and fixed it.

The engineering productivity gains, however, are undeniable; when it comes to colouring in between the lines, the work is nothing short of a chef’s kiss. Not only was the job done, but Javadocs were produced that a regular engineer would likely never create!

In this post, we’ve used Cursor to understand the codebase, document how to update it, and then use the generated specs to perform an update—all with minimal typing. We could prompt it to update its instructions where it made mistakes to ensure it did not repeat the error and have it correct current mistakes, fixing our code’s state in the now and protecting it for the future.

A wonderfully documented codebase is no longer a maintenance burden when Cursor and Claude can ensure that everything is up to date. Up-to-date documentation then helps to provide valuable context to the tool for future decision-making. It truly is an Ouroboros of a process!

This new engineering world is absolutely mind-blowing. I am so excited to see what is just around the corner for us!

Perhaps in the next post, we will dive into some MCP servers to see how they can elevate your workflow even further!

Happy Code Slothing 🦥