This tutorial will demonstrate how to run an ElasticSearch Docker image in containers for both single and multi-data node configurations. ElasticSearch Docker compose files will be used to orchestrate data node instances alongside a Docker ElasticSearch Kibana container to help query your data.

Read this article before? Jump to the Sloth Summary for docker-compose files to download!

Docker ElasticSearch Pre-Requisite

If you’re new to Docker, there’s no need to worry. Although this article is not a Docker tutorial, it provides all the necessary steps to set up and run your cluster.

Docker is a vast subject, and I have a comprehensive article dedicated to it. However, a prudent Code Sloth avoids getting overwhelmed by unnecessary intricacies. Therefore, click on this link to quickly navigate to the section about installing Docker Desktop, and then return to continue the tutorial.

Running an ElasticSearch Docker Compose File for Multi Data Node Cluster

Unlike in our OpenSearch Docker tutorial, there is no Elastic.Co sample docker compose file to directly consume. Therefore we are going to skip to our final one and talk about why it is shaped the way that it is.

    version: '3'
    services:
      elasticsearch-node1:
        image: docker.elastic.co/elasticsearch/elasticsearch:8.8.2
        container_name: elasticsearch-node1
        environment:
          - cluster.name=elasticsearch-cluster
          - node.name=elasticsearch-node1
          - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node2
          - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2
          - bootstrap.memory_lock=true # along with the memlock settings below, disables swapping
          - 'ES_JAVA_OPTS=-Xms512m -Xmx512m' # minimum and maximum Java heap size, recommend setting both to 50% of system RAM
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536 # maximum number of open files for the elasticsearch user, set to at least 65536 on modern systems
            hard: 65536
        volumes:
          - elasticsearch-data1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
        networks:
          - elasticsearch-net
      elasticsearch-node2:
        image: docker.elastic.co/elasticsearch/elasticsearch:8.8.2
        container_name: elasticsearch-node2
        environment:
          - cluster.name=elasticsearch-cluster
          - node.name=elasticsearch-node2
          - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node2
          - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2
          - bootstrap.memory_lock=true
          - 'ES_JAVA_OPTS=-Xms512m -Xmx512m'
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        volumes:
          - elasticsearch-data2:/usr/share/elasticsearch/data
        networks:
          - elasticsearch-net
      kibana:
        image: docker.elastic.co/kibana/kibana:8.8.2
        container_name: kibana
        ports:
          - 5601:5601
        expose:
          - '5601'
        environment:
          - 'ELASTICSEARCH_HOSTS=["http://elasticsearch-node1:9200","http://elasticsearch-node2:9200"]' # the Kibana Docker image reads ELASTICSEARCH_HOSTS, not ES_HOSTS
          - 'xpack.security.enabled=false' # disables security plugin
        networks:
          - elasticsearch-net
    volumes:
      elasticsearch-data1:
      elasticsearch-data2:
    networks:
      elasticsearch-net:
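The shape of the file is easier to see once you notice that both data nodes share almost every setting: only node.name (and the data volume) differs, while discovery.seed_hosts and cluster.initial_master_nodes list every node in the cluster. The following Python sketch illustrates that pattern. The helper name node_environment is hypothetical and exists only for this illustration; it is not part of Docker or Elasticsearch.

```python
def node_environment(node_name, all_nodes, cluster_name="elasticsearch-cluster", heap="512m"):
    """Return the compose `environment` entries for one Elasticsearch node.

    Hypothetical helper for illustration only: it mirrors the settings used
    in the compose file in this article.
    """
    peers = ",".join(all_nodes)
    return [
        f"cluster.name={cluster_name}",             # identical on every node
        f"node.name={node_name}",                   # the only per-node value
        f"discovery.seed_hosts={peers}",            # every node lists all nodes
        f"cluster.initial_master_nodes={peers}",
        "bootstrap.memory_lock=true",
        f"ES_JAVA_OPTS=-Xms{heap} -Xmx{heap}",      # min and max heap kept equal
        "xpack.security.enabled=false",             # local development only
    ]


nodes = ["elasticsearch-node1", "elasticsearch-node2"]
environments = {name: node_environment(name, nodes) for name in nodes}

for name, entries in environments.items():
    print(name)
    for entry in entries:
        print("  -", entry)
```

Adding a third data node would mean adding its name to the list, which changes the two discovery settings on every node at once.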

Common ElasticSearch Docker Compose Errors

ElasticSearch Docker Image Virtual Memory is Too Low

Running the above multi data node Docker Compose file for ElasticSearch on Windows will surface our first error.

elasticsearch-node1 | {"@timestamp":"2023-07-11T06:06:21.871Z", "log.level":"ERROR", "message":"node validation exception\n[1] bootstrap checks failed. You must address the points described in the following [1] lines before starting Elasticsearch.\nbootstrap check failure [1] of [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]", "ecs.version": "1.2.0","service.name":"ES_ECS","event.dataset":"elasticsearch.server","process.thread.name":"main","log.logger":"org.elasticsearch.bootstrap.Elasticsearch","elasticsearch.node.name":"elasticsearch-node1","elasticsearch.cluster.name":"elasticsearch-cluster"}

Fortunately, the elastic.co documentation addresses this problem. We are required to increase the value of vm.max_map_count in WSL. However, the suggested script doesn’t retain the modified setting after a system reboot.
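The JSON log lines above are hard to read in a terminal. As a minimal sketch, the following Python snippet pulls just the bootstrap check failures out of a log line. The function name bootstrap_failures is hypothetical, and the sample is an abridged copy of the log line shown above; it assumes docker-compose prefixes each line with the container name followed by a pipe.

```python
import json


def bootstrap_failures(log_line):
    """Extract bootstrap check failure descriptions from one container log line."""
    # docker-compose prefixes each line with "<container> | ";
    # the JSON document starts at the first "{".
    start = log_line.find("{")
    if start == -1:
        return []
    try:
        record = json.loads(log_line[start:])
    except json.JSONDecodeError:
        return []
    message = record.get("message", "")
    marker = "bootstrap check failure"
    return [line.strip() for line in message.split("\n") if line.startswith(marker)]


# Abridged copy of the log line from this article.
sample = (
    'elasticsearch-node1 | {"@timestamp":"2023-07-11T06:06:21.871Z", "log.level":"ERROR", '
    '"message":"node validation exception\\n[1] bootstrap checks failed. You must address the points '
    'described in the following [1] lines before starting Elasticsearch.\\nbootstrap check failure [1] of [1]: '
    'max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]", '
    '"elasticsearch.node.name":"elasticsearch-node1"}'
)

for failure in bootstrap_failures(sample):
    print(failure)
```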

This is not ideal for our Elasticsearch engineering workflow since we would need to perform the following steps each time we want to work with a cluster:

- Execute WSL command 1

- Execute WSL command 2

- Execute Docker Compose command

To simplify and streamline this process, let’s adopt a Code Sloth approach. Create a new file named RunElasticsearch.ps1 in the same directory as your Docker Compose file, and copy and paste the provided sample script into it.

    wsl -d docker-desktop sh -c "sysctl -w vm.max_map_count=262144"
    docker-compose up

This script does the following:

- It combines all the steps recommended by elastic.co into a single step (thanks to this StackOverflow answer for explaining how to add arguments to the wsl command).

- It tells Docker to fire up the docker-compose.yml file in the current directory.
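If you would rather drive the same two steps from Python than from PowerShell, the script could be sketched as below. This is an illustration under assumptions, not a replacement for the script above: the function names launch_commands and launch are hypothetical, and it assumes wsl and docker-compose are both on your PATH. The commands themselves are the ones used in the PowerShell script.

```python
import subprocess

MAX_MAP_COUNT = 262144  # the minimum value named in the Elasticsearch bootstrap error


def launch_commands(max_map_count=MAX_MAP_COUNT):
    """Return the two commands from the PowerShell script, in the order they must run."""
    return [
        # Step 1: raise vm.max_map_count inside the docker-desktop WSL distribution.
        ["wsl", "-d", "docker-desktop", "sh", "-c", f"sysctl -w vm.max_map_count={max_map_count}"],
        # Step 2: start the services in docker-compose.yml from the current directory.
        ["docker-compose", "up"],
    ]


def launch(run=subprocess.run):
    """Run each command in order, stopping at the first one that fails."""
    for command in launch_commands():
        run(command, check=True)
```

Calling launch() from the directory that holds docker-compose.yml runs both steps. Because check=True raises on a non-zero exit code, the cluster is not started if the sysctl step fails.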

From now on, whenever you want to run your Elasticsearch cluster, simply right-click the file and choose “Run with PowerShell.” Alternatively, you can right-click inside the folder, select “Open Terminal,” type .\RunElasticsearch.ps1 and hit Enter.

Either way, you won’t have to worry about the memory issue ever again!

WSL Command Not Setting Increased Memory

If you find that the error persists after running the script above, try running wsl --update. In my case, the WSL command started failing after an upgrade from Windows 10 to Windows 11, and updating WSL fixed the issue.

ElasticSearch Docker Compose SSL Connectivity Issues

The next issue pops up in the container logs as the nodes start, before you are able to connect to the cluster.

elasticsearch-node2 | {"@timestamp":"2023-07-11T06:08:01.852Z", "log.level":"ERROR", "message":"node validation exception\n[1] bootstrap checks failed. You must address the points described in the following [1] lines before starting Elasticsearch.\nbootstrap check failure [1] of [1]: Transport SSL must be enabled if security is enabled. Please set [xpack.security.transport.ssl.enabled] to [true] or disable security by setting [xpack.security.enabled] to [false]", "ecs.version": "1.2.0","service.name":"ES_ECS","event.dataset":"elasticsearch.server","process.thread.name":"main","log.logger":"org.elasticsearch.bootstrap.Elasticsearch","elasticsearch.node.name":"elasticsearch-node2","elasticsearch.cluster.name":"elasticsearch-cluster"}

This issue arises because Elasticsearch 8 enables security by default, and a node with security enabled must also have transport SSL enabled. For local development, security can be explicitly disabled by setting the environment variable xpack.security.enabled=false in each of the node containers in the docker compose file.

    version: '3'
    services:
      elasticsearch-node1:
        environment:
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
      elasticsearch-node2:
        environment:
          - xpack.security.enabled=false # additional configuration to disable security by default for local development

A completely updated compose file can be found in the Sloth Summary of this post.
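Since the setting has to be repeated on every data node, it is easy to miss one. The following Python sketch checks a parsed compose file for Elasticsearch services that lack the setting. The function name nodes_missing_setting is hypothetical, and the sketch assumes the environment section uses the list form shown in this article. It works on a plain dictionary, so any YAML parser (for example yaml.safe_load from the third-party PyYAML package) could supply the input.

```python
def nodes_missing_setting(
    compose,
    setting="xpack.security.enabled=false",
    image_prefix="docker.elastic.co/elasticsearch/",
):
    """Return the names of Elasticsearch services whose environment lacks `setting`."""
    missing = []
    for name, service in compose.get("services", {}).items():
        if not service.get("image", "").startswith(image_prefix):
            continue  # skip Kibana and any other non-Elasticsearch service
        if setting not in service.get("environment", []):
            missing.append(name)
    return missing


# Hand-written example input: node2 deliberately lacks the setting.
compose = {
    "services": {
        "elasticsearch-node1": {
            "image": "docker.elastic.co/elasticsearch/elasticsearch:8.8.2",
            "environment": ["node.name=elasticsearch-node1", "xpack.security.enabled=false"],
        },
        "elasticsearch-node2": {
            "image": "docker.elastic.co/elasticsearch/elasticsearch:8.8.2",
            "environment": ["node.name=elasticsearch-node2"],
        },
        "kibana": {"image": "docker.elastic.co/kibana/kibana:8.8.2"},
    }
}

print(nodes_missing_setting(compose))
```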

Additional SSL Connectivity Issues From ElasticSearch Docker Compose

Now you’re able to run the two Elasticsearch data nodes and connect to them with an external tool such as Multi ElasticSearch Head Chrome Extension. However, if you navigate to http://localhost:5601 and try to configure the cluster in Kibana, you’ll hit another problem.

kibana | [2023-07-11T06:15:12.065+00:00][ERROR][plugins.interactiveSetup.elasticsearch] Unable to connect to host "https://localhost:9200": connect ECONNREFUSED 127.0.0.1:9200

This happens because security has not yet been disabled for Kibana.

To fix it, give the kibana service a list of environment variables that includes xpack.security.enabled=false:

      kibana:
        image: docker.elastic.co/kibana/kibana:8.8.2
        container_name: kibana
        ports:
          - 5601:5601
        expose:
          - '5601'
        environment:
          - 'ELASTICSEARCH_HOSTS=["http://elasticsearch-node1:9200","http://elasticsearch-node2:9200"]' # the Kibana Docker image reads ELASTICSEARCH_HOSTS, not ES_HOSTS
          - 'xpack.security.enabled=false' # disables security plugin

We will touch on Kibana in a future tutorial article. But for now you can think of it as another tool that lets you write queries against your cluster.

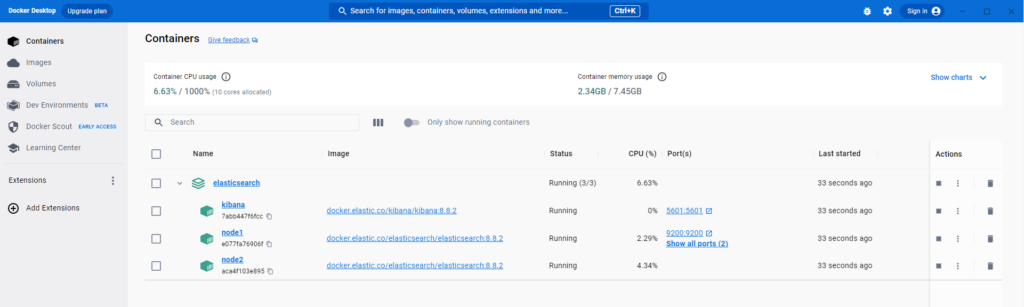

Now you’re up and running with Elasticsearch! If you open the Docker Dashboard, you should see your compose file running with the two data nodes and Kibana containers.
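The Docker Dashboard shows that the containers are running, but not whether both nodes actually joined the same cluster. As a minimal sketch, the cluster health API on the published port 9200 can answer that. The function names fetch_health and describe_health are hypothetical, and the sample dictionary is a hand-written illustration of the fields the sketch reads, not captured output.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url="http://localhost:9200", timeout=5):
    """Call the cluster health API on the port published by the compose file."""
    with urlopen(f"{base_url}/_cluster/health", timeout=timeout) as response:
        return json.load(response)


def describe_health(health, expected_nodes=2):
    """Summarise a cluster health response as one readable line."""
    nodes = health.get("number_of_nodes", 0)
    status = health.get("status", "unknown")
    if nodes < expected_nodes:
        return f"only {nodes} of {expected_nodes} nodes joined (status: {status})"
    return f"all {expected_nodes} nodes joined (status: {status})"


# Hand-written sample containing only the fields used above.
sample = {"cluster_name": "elasticsearch-cluster", "status": "green", "number_of_nodes": 2}
print(describe_health(sample))
```

Once the containers are up, describe_health(fetch_health()) performs the real check. For the single data node file, pass expected_nodes=1.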

Sloth Summary

In summary, to get Elasticsearch running in Docker on Windows:

- Make a docker compose file that has security disabled for each node and Kibana

- Define a PowerShell script to:

  - Increase the vm.max_map_count limit in WSL

  - Run docker-compose up

Happy searching!

Docker ElasticSearch Script

The following script can be used to run your Elasticsearch Docker compose file of choice below. Copy this and save it into a .ps1 file to run from PowerShell.

    wsl -d docker-desktop sh -c "sysctl -w vm.max_map_count=262144"
    docker-compose up

Multi Data Node ElasticSearch Docker Compose File with Kibana

Copy the following into a file called docker-compose.yml within the same directory as the above PowerShell script to run 3 containers:

- ElasticSearch Data Node 1

- ElasticSearch Data Node 2

- Kibana

    version: '3'
    services:
      elasticsearch-node1:
        image: docker.elastic.co/elasticsearch/elasticsearch:8.8.2
        container_name: elasticsearch-node1
        environment:
          - cluster.name=elasticsearch-cluster
          - node.name=elasticsearch-node1
          - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node2
          - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2
          - bootstrap.memory_lock=true # along with the memlock settings below, disables swapping
          - 'ES_JAVA_OPTS=-Xms512m -Xmx512m' # minimum and maximum Java heap size, recommend setting both to 50% of system RAM
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536 # maximum number of open files for the elasticsearch user, set to at least 65536 on modern systems
            hard: 65536
        volumes:
          - elasticsearch-data1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
        networks:
          - elasticsearch-net
      elasticsearch-node2:
        image: docker.elastic.co/elasticsearch/elasticsearch:8.8.2
        container_name: elasticsearch-node2
        environment:
          - cluster.name=elasticsearch-cluster
          - node.name=elasticsearch-node2
          - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node2
          - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2
          - bootstrap.memory_lock=true
          - 'ES_JAVA_OPTS=-Xms512m -Xmx512m'
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        volumes:
          - elasticsearch-data2:/usr/share/elasticsearch/data
        networks:
          - elasticsearch-net
      kibana:
        image: docker.elastic.co/kibana/kibana:8.8.2
        container_name: kibana
        ports:
          - 5601:5601
        expose:
          - '5601'
        environment:
          - 'ELASTICSEARCH_HOSTS=["http://elasticsearch-node1:9200","http://elasticsearch-node2:9200"]' # the Kibana Docker image reads ELASTICSEARCH_HOSTS, not ES_HOSTS
          - 'xpack.security.enabled=false' # disables security plugin
        networks:
          - elasticsearch-net
    volumes:
      elasticsearch-data1:
      elasticsearch-data2:
    networks:
      elasticsearch-net:

Single Data Node ElasticSearch Docker Compose File with Kibana

Copy the following into a file called docker-compose.yml within the same directory as the above PowerShell script to run 2 containers:

- ElasticSearch Data Node 1

- Kibana

    version: '3'
    services:
      elasticsearch-node1:
        image: docker.elastic.co/elasticsearch/elasticsearch:8.8.2
        container_name: elasticsearch-node1
        environment:
          - cluster.name=elasticsearch-cluster
          - node.name=elasticsearch-node1
          - discovery.seed_hosts=elasticsearch-node1
          - cluster.initial_master_nodes=elasticsearch-node1
          - bootstrap.memory_lock=true # along with the memlock settings below, disables swapping
          - 'ES_JAVA_OPTS=-Xms512m -Xmx512m' # minimum and maximum Java heap size, recommend setting both to 50% of system RAM
          - xpack.security.enabled=false # additional configuration to disable security by default for local development
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536 # maximum number of open files for the elasticsearch user, set to at least 65536 on modern systems
            hard: 65536
        volumes:
          - elasticsearch-data1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
        networks:
          - elasticsearch-net
      kibana:
        image: docker.elastic.co/kibana/kibana:8.8.2
        container_name: kibana
        ports:
          - 5601:5601
        expose:
          - '5601'
        environment:
          - 'ELASTICSEARCH_HOSTS=["http://elasticsearch-node1:9200"]' # the Kibana Docker image reads ELASTICSEARCH_HOSTS, not ES_HOSTS
          - 'xpack.security.enabled=false' # disables security plugin
        networks:
          - elasticsearch-net
    volumes:
      elasticsearch-data1:
    networks:
      elasticsearch-net: