Programming directly against C# concrete classes is one of the easiest ways to degrade the unit testability of your code, as they introduce overlapping test cases that exponentially increase the number of unit tests required for full coverage. This article will cover simple steps for implementing concepts of C# abstraction to simplify your codebase and dramatically increase your solution’s unit testability.

Remember to check out the Code Sloth Code Samples page for GitHub links to the repository of sample code!

Today’s article contains assumed knowledge about programming concepts such as classes, interfaces, polymorphism and mocking. Getting back into .Net? Check out our post on getting started with .Net 6 here to resume your .Net journey!

Recap

In the last article we learned about sticky loops and the ultimate refactoring technique to avoid their perilous impact on writing unit tests. While this strategy made our code more testable, it unfortunately didn’t provide a huge impact against the many other issues of our bad code.

As we started to write our first unit test on the RocketLaunchingLogic class, we found ourselves drowning in test data setup. 9 different classes needed to be initialised in order to satisfy the constructor and this was just the beginning of our testing woes.

A wise Code Sloth should have a healthy diagnosis of gamophobia (fear of commitment) … when programming against concrete types that is. Today’s article will teach you how to start nurturing your very own diagnosis by not only demonstrating the drawbacks of committing to concrete implementations, but highlighting how unit testing becomes a breeze when we make a mock-ery of complexity!

Problem 1: Test Setup

The first problem of programming against concrete dependencies is that they complicate test setup. As we saw in the previous article, we needed to set up many levels of nested dependencies before we could even make a RocketLaunchingLogic object.

As a test suite grows over time this verbose setup process encourages engineers to (with good intentions) start to write helper methods to set up these dependencies.

    /// <summary>
    /// An example of a setup method that may be engineered to combat the complex test data setup. This is a code smell and should be avoided!
    /// </summary>
    private RocketLaunchingLogic MakeRocketLaunchingLogicInitialImplementation()
    {
        var thrustCalculator = new ThrustCalculator();
        var pretendDatabaseClient = new PretendDatabaseClient(
            "connection string",
            TimeSpan.FromSeconds(10),
            TimeSpan.FromSeconds(60),
            3,
            5
        );

        var loggerFactory = LoggerFactory.Create((loggingBuilder) => { });
        var rocketDatabaseRetrieverLogger = LoggerFactoryExtensions.CreateLogger<RocketDatabaseRetriever>(loggerFactory);
        var rocketDatabaseRetriever = new RocketDatabaseRetriever(pretendDatabaseClient, rocketDatabaseRetrieverLogger);
        var rocketQueuePoller = new RocketQueuePoller(1, 2, "dependency info");
        var httpClient = new HttpClient();
        var rocketLaunchingService = new RocketLaunchingService(httpClient);
        var rocketLauncherLogger = LoggerFactoryExtensions.CreateLogger<RocketLauncher>(loggerFactory);
        var rocketLaunchingLogic = new RocketLaunchingLogic(thrustCalculator, rocketDatabaseRetriever, rocketQueuePoller, rocketLaunchingService, rocketLauncherLogger);

        return rocketLaunchingLogic;
    }

At some point in the future this method will likely evolve to become parameterised. This may be because a new testing flow is not supported through the existing setup that was written to satisfy what will likely be labelled as “legacy functionality”.

A well meaning engineer will then add some parameters onto the method to help fix their setup problem. If the test suite is not yet very large they’ll likely also maintain the existing tests to provide the required data:

    /// <summary>
    /// As the test suite grows over time, this helper method will likely become parameterised. Perhaps it may make sense at first.
    /// </summary>
    private RocketLaunchingLogic MakeRocketLaunchingLogicSecondIteration(string connectionString, int durationSeconds)
    {
        var thrustCalculator = new ThrustCalculator();
        var pretendDatabaseClient = new PretendDatabaseClient(
            connectionString,
            TimeSpan.FromSeconds(durationSeconds),
            TimeSpan.FromSeconds(60),
            3,
            5
        );

        var loggerFactory = LoggerFactory.Create((loggingBuilder) => { });
        var rocketDatabaseRetrieverLogger = LoggerFactoryExtensions.CreateLogger<RocketDatabaseRetriever>(loggerFactory);
        var rocketDatabaseRetriever = new RocketDatabaseRetriever(pretendDatabaseClient, rocketDatabaseRetrieverLogger);
        var rocketQueuePoller = new RocketQueuePoller(1, 2, "dependency info");
        var httpClient = new HttpClient();
        var rocketLaunchingService = new RocketLaunchingService(httpClient);
        var rocketLauncherLogger = LoggerFactoryExtensions.CreateLogger<RocketLauncher>(loggerFactory);
        var rocketLaunchingLogic = new RocketLaunchingLogic(thrustCalculator, rocketDatabaseRetriever, rocketQueuePoller, rocketLaunchingService, rocketLauncherLogger);

        return rocketLaunchingLogic;
    }

As the logic of the application grows over time, more tests are added and our function enters the beginning of its final terrible form, from which it will never recover: nullable parameters.

A developer thinks to themselves “oh dear, we have a lot of tests using this method at the moment and I don’t want to have to update them all. I’ll just add a nullable parameter here and fall back to the existing value so that I can keep my change as small as possible”:

    /// <summary>
    /// There we have it, the nullable parameters begin, logic creeps into this method and before we know it nobody knows what this data means, what this method does, or how it impacts a given test case
    /// </summary>
    private RocketLaunchingLogic MakeRocketLaunchingLogicThirdIteration(string connectionString, int durationSeconds, string? dependencyInfo)
    {
        var thrustCalculator = new ThrustCalculator();
        var pretendDatabaseClient = new PretendDatabaseClient(
            connectionString,
            TimeSpan.FromSeconds(durationSeconds),
            TimeSpan.FromSeconds(60),
            3,
            5
        );

        var loggerFactory = LoggerFactory.Create((loggingBuilder) => { });
        var rocketDatabaseRetrieverLogger = LoggerFactoryExtensions.CreateLogger<RocketDatabaseRetriever>(loggerFactory);
        var rocketDatabaseRetriever = new RocketDatabaseRetriever(pretendDatabaseClient, rocketDatabaseRetrieverLogger);
        var rocketQueuePoller = new RocketQueuePoller(1, 2, dependencyInfo ?? "dependency info");
        var httpClient = new HttpClient();
        var rocketLaunchingService = new RocketLaunchingService(httpClient);
        var rocketLauncherLogger = LoggerFactoryExtensions.CreateLogger<RocketLauncher>(loggerFactory);
        var rocketLaunchingLogic = new RocketLaunchingLogic(thrustCalculator, rocketDatabaseRetriever, rocketQueuePoller, rocketLaunchingService, rocketLauncherLogger);

        return rocketLaunchingLogic;
    }

This function will either continue to explode with nullable parameters over time, or begin to take many different forms as it is copy pastaed over and over, each version of the method becoming indistinguishable in intent from the last. It is typically at this point that all hope is lost when it comes to writing new tests for this area of the application.

The urge to write shared test setup methods is a code smell for multiple reasons. Is it because you have too many dependencies to set up? We’ll cover this in a future article. Is it because you’re programming against too many concrete types? Keep reading.

Problem 2: Are We Even Unit Testing Anymore?

Once you manage to wrangle your dependencies together and inject them into your class under test another problem surfaces. We’re likely no longer in the realm of unit testing.

Looking at our RocketLaunchingService as an example, we can see that this class aggregates an HttpClient. This client will literally make a web request on our behalf when invoked, which will exit the process space of our tests.

By definition, a test that leaves the process space of the code-under-test is an integration test; something that we are not trying to author.
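To make the problem concrete, here is a rough sketch of what a class shaped like this might look like. The article does not show the body of RocketLaunchingService, so the LaunchRocket method, its parameter and the URL are all assumptions made purely for illustration.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical shape of the service. Only the HttpClient constructor
// parameter comes from the article; everything else is assumed.
public class RocketLaunchingService
{
    private readonly HttpClient httpClient;

    public RocketLaunchingService(HttpClient httpClient)
    {
        this.httpClient = httpClient ?? throw new ArgumentNullException(nameof(httpClient));
    }

    // Assumed method: any test that reaches this line sends a real request
    // over the network, which takes it out of the test process.
    public async Task<bool> LaunchRocket(int rocketId)
    {
        // Placeholder URL, not an endpoint from the sample repository.
        var response = await httpClient.PostAsync(
            $"https://example.invalid/rockets/{rocketId}/launch",
            content: null);

        return response.IsSuccessStatusCode;
    }
}
```

Every class that receives a concrete RocketLaunchingService inherits this behaviour, whether its tests want it or not.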

Problem 3: Overlapping Test Cases

The third and final problem of programming against concrete types might be a little less obvious.

Have you ever started writing tests and found that you are effectively writing the same test logic over and over again in different classes? Only, each time you come across the use case it is slightly twisted and more difficult to simulate?

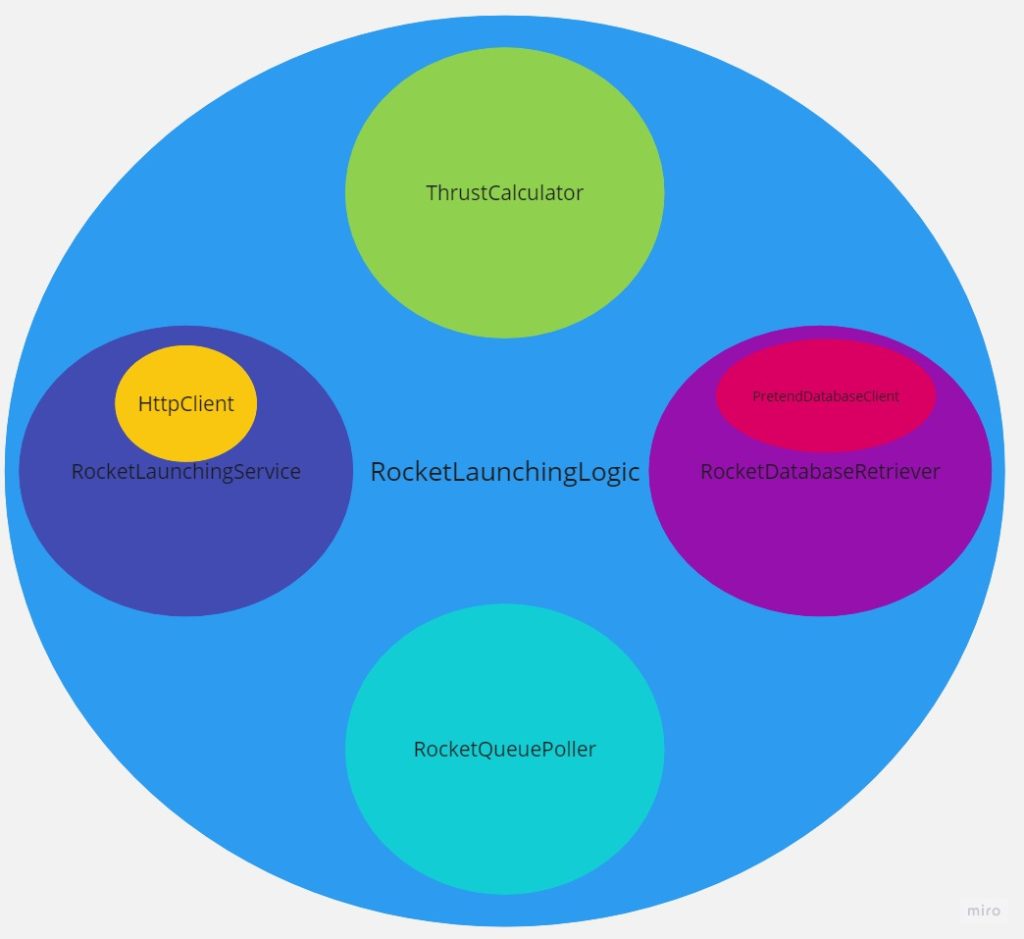

The diagram below demonstrates the logical dependencies between our current classes:

Each circle in this diagram represents a set of functionality belonging to a class. Circles that exist within other circles represent a logical dependency. Here we can see that the RocketLaunchingLogic class depends on the logic of every other class, because they all sit within its dependency circle.

Let’s laser focus onto a specific set of overlapping logical dependencies:

RocketLaunchingLogic depends on RocketDatabaseRetriever, which depends on PretendDatabaseClient

This means that we may be able to easily write a series of unit tests against PretendDatabaseClient. After all, it has no dependencies to orchestrate.

However, when we write tests for the RocketDatabaseRetriever we then find ourselves needing to replicate the outputs that we observed when testing the PretendDatabaseClient. This is because the outputs of the PretendDatabaseClient may impact the behaviour of the RocketDatabaseRetriever and our unit tests will document and assert those outcomes.

However, this may be slightly more difficult than before, as we need to orchestrate both the PretendDatabaseClient and the RocketDatabaseRetriever together. Doing this causes many permutations and combinations, and the number of test cases for our RocketDatabaseRetriever explodes in comparison to the PretendDatabaseClient.

Finally, when we start to write tests for RocketLaunchingLogic we also need to re-test RocketDatabaseRetriever logic and the PretendDatabaseClient logic. That’s if it is even possible to simulate each of their potential outputs from a higher level of invocation. The total number of tests required for this class then explodes exponentially when compared to the prior two classes.

When programming against concrete implementations, your unit tests will need to have an understanding of the functionality of all classes within a logical dependency tree. If your tree is 100 levels deep, you will need to orchestrate 100 different sets of logic in order to explore the different paths through your primary class under test. Good luck with that!
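To put rough numbers on that explosion, here is a back-of-the-envelope sketch. The outcome counts used below (3, 4 and 5) are made up for illustration, and TestCaseCounter is a hypothetical helper rather than anything from the sample repository.

```csharp
using System;
using System.Linq;

public static class TestCaseCounter
{
    // Worst case through a concrete chain: every outcome of a lower class can
    // combine with every outcome of the classes above it, so the counts multiply.
    public static long ConcreteChain(params int[] outcomesPerClass) =>
        outcomesPerClass.Aggregate(1L, (total, outcomes) => total * outcomes);

    // If each class could be tested on its own, the counts would only add up.
    public static long TestedInIsolation(params int[] outcomesPerClass) =>
        outcomesPerClass.Sum(outcomes => (long)outcomes);
}
```

With those assumed counts, `TestCaseCounter.ConcreteChain(3, 4, 5)` gives 60 combinations to consider from the top of the tree, while `TestCaseCounter.TestedInIsolation(3, 4, 5)` gives 12 across all three classes. Add a fourth level and the first number multiplies again, while the second only grows by one more term.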

Pruning our Problematic Dependency Trees

Programming against a concrete implementation binds us to one definition of code for the rest of time. Or until we refactor it to be better, that is.

We can avoid programming against concrete implementations by using the dependency inversion principle. This involves programming against interfaces, instead of concrete implementations.

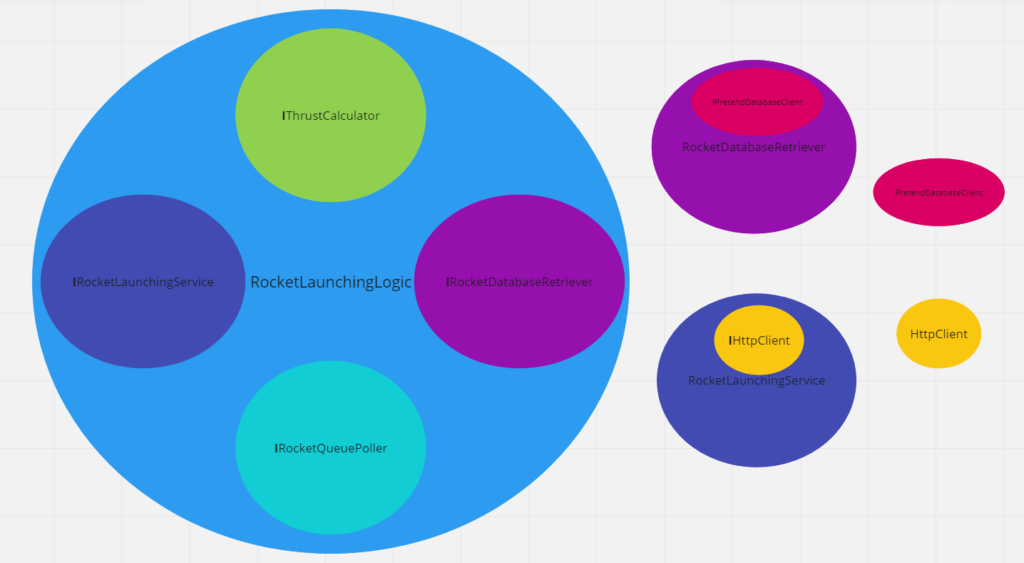

Let’s revise our diagram from above to show how programming against interfaces impacts our logical dependencies.

Here we can see that our RocketLaunchingLogic now depends on interfaces rather than concrete classes; denoted by a name starting with I. Our RocketLaunchingService and RocketDatabaseRetriever also now depend on interfaces.

Do you notice that none of the interface circles contain logical dependencies? This is because interfaces add a layer of abstraction (mystery) as to what happens beneath them.

When you introduce an abstraction, you prune a dependency tree at the point that the abstraction is introduced.

Let’s laser focus again onto the overlapping logical dependencies from earlier:

- RocketLaunchingLogic now depends on IRocketDatabaseRetriever

- RocketDatabaseRetriever now depends on IPretendDatabaseClient

- PretendDatabaseClient continues to depend on nothing

Do you see how none of these dependency trees is greater than one level deep? This is the power of abstraction!

When writing unit tests for the RocketLaunchingLogic class we no longer need to worry about re-testing RocketDatabaseRetriever and PretendDatabaseClient, because the RocketLaunchingLogic class does not know anything about them!
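Here is a minimal sketch of what a pruned tree can look like in code. The interface names come from the diagram, but the Query and FindRocketName methods are invented for illustration, and FakeDatabaseClient is a hand-rolled stand-in so that the sketch needs no mocking library.

```csharp
#nullable enable
using System;
using System.Threading.Tasks;

// Hypothetical members: the article does not list the real methods.
public interface IPretendDatabaseClient
{
    Task<string?> Query(string query);
}

public interface IRocketDatabaseRetriever
{
    Task<string?> FindRocketName(int rocketId);
}

// The retriever only knows about the client interface, so its dependency
// tree stops one level down.
public class RocketDatabaseRetriever : IRocketDatabaseRetriever
{
    private readonly IPretendDatabaseClient client;

    public RocketDatabaseRetriever(IPretendDatabaseClient client) =>
        this.client = client ?? throw new ArgumentNullException(nameof(client));

    public Task<string?> FindRocketName(int rocketId) =>
        client.Query($"rocket:{rocketId}");
}

// A fake that returns whatever the test hands it. No connection strings,
// timeouts or retry counts required.
public class FakeDatabaseClient : IPretendDatabaseClient
{
    private readonly string? cannedResult;

    public FakeDatabaseClient(string? cannedResult) => this.cannedResult = cannedResult;

    public Task<string?> Query(string query) => Task.FromResult(cannedResult);
}
```

A test for the retriever can now pass in `new FakeDatabaseClient("Sloth One")` and never think about how the real PretendDatabaseClient behaves.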

Levelling up the Lift and Shift, Vertically!

In the last article we lifted and shifted our code from one concrete class into another concrete class. Let’s level it up into an even more useful form!

- Firstly, lift and shift your required code into a new class

- Generate an interface for the new class (and implement it by the class if not using Visual Studio’s extract interface feature)

- Aggregate the new interface as a member variable in the consumer of the original class (the class that the code was lifted from), with a new constructor parameter to allow for injection.

We’ve now made our old class far more testable! This injected interface is a promise that any class which implements it can provide the functionality described by its methods, otherwise known as the strategy pattern. While using different algorithms at runtime is not our intent, this pattern lets us, at testing time, inject a new mock algorithm for functionality we do not want to re-test.

This approach to the lift and shift shall henceforth be known as the vertical lift and shift!
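The three steps can be sketched on a tiny example. All of the names here (FuelChecker, IFuelChecker, LaunchPad, AlwaysEnoughFuel) and the 100 litre threshold are hypothetical and do not appear in the sample repository.

```csharp
using System;

// Step 2: the interface generated for the new class.
public interface IFuelChecker
{
    bool HasEnoughFuel(int litres);
}

// Step 1: the logic that was lifted and shifted out of LaunchPad.
public class FuelChecker : IFuelChecker
{
    public bool HasEnoughFuel(int litres) => litres >= 100;
}

// Step 3: the consumer aggregates the interface and receives it by injection.
public class LaunchPad
{
    private readonly IFuelChecker fuelChecker;

    public LaunchPad(IFuelChecker fuelChecker)
    {
        this.fuelChecker = fuelChecker ?? throw new ArgumentNullException(nameof(fuelChecker));
    }

    public string Prepare(int litres) =>
        fuelChecker.HasEnoughFuel(litres) ? "ready" : "refuel";
}

// At testing time we can swap in a different "algorithm" that steers the flow.
public class AlwaysEnoughFuel : IFuelChecker
{
    public bool HasEnoughFuel(int litres) => true;
}
```

With the stand-in injected, `new LaunchPad(new AlwaysEnoughFuel()).Prepare(0)` takes the "ready" path without the test needing to know what the real threshold is.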

Putting Polymorphism into Practice

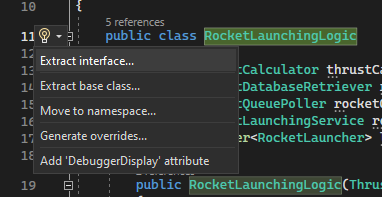

The goal of a pragmatic software engineer should be to type as few characters as possible when programming. Don’t go typing out every new interface that you require when they can be automatically generated for you!

- Right click the class that you need an interface for

- Select Quick Actions and Refactorings... or use the keyboard shortcut Ctrl + .

- Then select Extract interface...

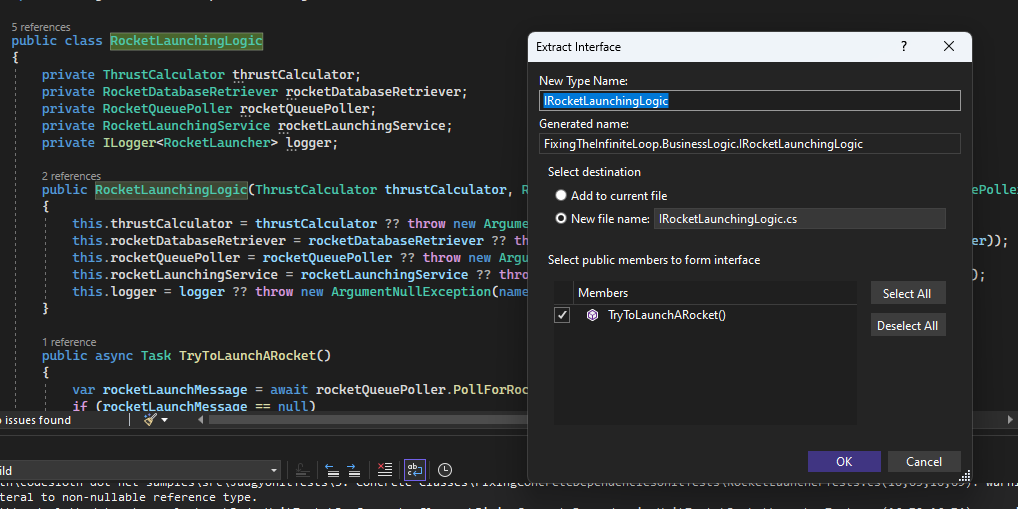

Accept the default options and voila! You’ve got a brand new interface, in its own file, that is implemented by your class.

Let’s take a look at the before and after:

The interface is nothing more than an expression of the available functionality. IRocketLaunchingLogic tells us that it can TryToLaunchARocket. How? Who knows!

    namespace FixingConcreteDependencies.BusinessLogic
    {
        public interface IRocketLaunchingLogic
        {
            Task TryToLaunchARocket();
        }
    }

Finally this interface is consumed by our RocketLauncher:

    using FixingConcreteDependencies.BusinessLogic;
    using Microsoft.Extensions.Hosting;

    namespace FixingConcreteDependencies
    {
        public class RocketLauncher : BackgroundService
        {
            private readonly IRocketLaunchingLogic rocketLaunchingLogic;

            public RocketLauncher(IRocketLaunchingLogic rocketLaunchingLogic)
            {
                this.rocketLaunchingLogic = rocketLaunchingLogic ?? throw new ArgumentNullException(nameof(rocketLaunchingLogic));
            }

            protected override async Task ExecuteAsync(CancellationToken stoppingToken)
            {
                while (!stoppingToken.IsCancellationRequested)
                {
                    await rocketLaunchingLogic.TryToLaunchARocket();
                }
            }
        }
    }

Nothing really changes in how we write our code. Only what we write our code with.

Once each class has a corresponding interface we are ready to test again.

Making a Mockery of Complexity

Unit testing starts to look a little different when we are working with interfaces. While we could actually create real concrete implementations of the interface dependencies of a class during unit testing, we don’t. That would defeat the purpose of having just created the interfaces!

Instead we provide mock instantiations of interfaces to the constructor of our class under test. This allows the mocked objects to return fake data when their methods are called, to influence the flow of logic through our method under test.

Fake data can come in any shape or form. If a mocked method returns an int, we could have our mock return the value 1 or -9,999,999. It’s up to us to decide what data will steer the flow of logic in our desired path through our function under test. Sometimes we don’t even care!

There are many different NuGet packages of mocking libraries. In this series we will be using Moq.
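As a small taste of what returning fake data looks like with Moq, here is a minimal sketch. It needs the Moq NuGet package. The IThrustCalculator signature is assumed to mirror the CalculateThrust method of the ThrustCalculator class in this series, and FakeDataSketch is a hypothetical wrapper used only to keep the example self-contained.

```csharp
using Moq;

// Assumed to match the concrete ThrustCalculator's method signature.
public interface IThrustCalculator
{
    int CalculateThrust(int input1, int input2, int input3, int input4, int input5, int numberOfSloths);
}

public static class FakeDataSketch
{
    public static int ThrustFromMock(int cannedThrust)
    {
        var thrustCalculatorMock = new Mock<IThrustCalculator>();

        // Whatever arguments are passed in, the mock hands back our canned value.
        thrustCalculatorMock
            .Setup(calculator => calculator.CalculateThrust(
                It.IsAny<int>(), It.IsAny<int>(), It.IsAny<int>(),
                It.IsAny<int>(), It.IsAny<int>(), It.IsAny<int>()))
            .Returns(cannedThrust);

        // The class under test would normally make this call for us.
        return thrustCalculatorMock.Object.CalculateThrust(1, 2, 3, 4, 5, 6);
    }
}
```

Here the inputs are ignored entirely: `FakeDataSketch.ThrustFromMock(-9999999)` returns -9999999, because the value is chosen by the test rather than calculated.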

Let’s take a look at our RocketLauncherTests once more. Remember our existing test?

    [Fact]
    public async Task RocketLauncher_StopsLaunchingRockets_WhenCancellationTokenIsSignaled()
    {
        var rocketLaunchingLogic = new RocketLaunchingLogic(null, null, null, null, null);
        var rocketLauncher = new RocketLauncher(rocketLaunchingLogic);
        var cancellationTokenSource = new CancellationTokenSource();
        var cancellationToken = cancellationTokenSource.Token;

        cancellationTokenSource.Cancel();
        await rocketLauncher.StartAsync(cancellationToken);

        // A very silly assertion to confirm that we exited from StartAsync
        true.Should().BeTrue();
    }

This test can now be written properly:

    /// <summary>
    /// We can now objectively assert that we terminate the loop after the token is signalled
    /// </summary>
    [Fact]
    public async Task RocketLauncher_StopsLaunchingRockets_WhenCancellationTokenIsSignaled()
    {
        var rocketLaunchingLogicMock = new Mock<IRocketLaunchingLogic>();
        var rocketLauncher = new RocketLauncher(rocketLaunchingLogicMock.Object);
        var cancellationTokenSource = new CancellationTokenSource();
        var cancellationToken = cancellationTokenSource.Token;

        cancellationTokenSource.CancelAfter(1000);
        await rocketLauncher.StartAsync(cancellationToken);

        // We still lack determinism through the usage of cancellation token, but can assert against something more concrete
        rocketLaunchingLogicMock.Verify(method => method.TryToLaunchARocket(), Times.AtLeastOnce());
    }

While we still lack determinism around how many launches were made before the cancellation token was signaled, we have overcome two obstacles:

- We can now write a test that does what it says! The last test never even tried to call TryToLaunchARocket…

- We can assert that our interface was invoked. Previously this would have been impossible

Programming against this interface has also unlocked two additional test cases.

Firstly, we can create a test case for the specific flow that our last test tried to achieve:

    /// <summary>
    /// The same test from before, except this time we have an objective assertion
    /// </summary>
    [Fact]
    public async Task RocketLauncher_DoesNotLaunchARocket_WhenGivenACancelledToken()
    {
        var rocketLaunchingLogicMock = new Mock<IRocketLaunchingLogic>();
        var rocketLauncher = new RocketLauncher(rocketLaunchingLogicMock.Object);
        var cancellationTokenSource = new CancellationTokenSource();
        var cancellationToken = cancellationTokenSource.Token;

        cancellationTokenSource.Cancel();
        await rocketLauncher.StartAsync(cancellationToken);

        // We should never launch a rocket if our token is already cancelled
        rocketLaunchingLogicMock.Verify(method => method.TryToLaunchARocket(), Times.Never());
    }

This test clearly documents that if our cancellation token is already cancelled when we start our rocket launcher, we never invoke TryToLaunchARocket. This is actually what our original attempt was doing.

Finally, we can test one more flow. This type of flow is often forgotten, especially for void or Task return types:

    /// <summary>
    /// One testing flow that is often overlooked is: what happens when an exception is thrown?
    /// </summary>
    [Fact]
    public async Task RocketLauncher_PropagatesUnhandledException_WhenTheyAreThrownByRocketLaunchingLogic()
    {
        var rocketLaunchingLogicMock = new Mock<IRocketLaunchingLogic>();

        // When the rocket launching logic interface is invoked, our mock will throw an exception
        rocketLaunchingLogicMock
            .Setup(method => method.TryToLaunchARocket())
            .ThrowsAsync(new Exception("A fake exception to be thrown when the mock is called"));

        var rocketLauncher = new RocketLauncher(rocketLaunchingLogicMock.Object);
        var cancellationTokenSource = new CancellationTokenSource();

        // We do not need to specify cancellation conditions, as the exception is expected to break us from the loop
        var cancellationToken = cancellationTokenSource.Token;

        Func<Task> act = () => rocketLauncher.StartAsync(cancellationToken);

        // Fluent assertions catches the exception which bubbles out of the RocketLauncher
        await act.Should().ThrowAsync<Exception>();

        // We can assert with determinism that this flow only calls TryToLaunchARocket once
        rocketLaunchingLogicMock.Verify(method => method.TryToLaunchARocket(), Times.Once());
    }

That’s right! void and Task functions may not return a result, however, they may still throw exceptions! It is important to understand how your code will respond in these flows.

In the example above, we let the exception go unhandled which would cause it to “bubble up” the call stack and likely crash the running application. Would this behaviour be desirable in your productionised application?

Another Attempt to Test Launch Logic

Now that our RocketLaunchingLogic class aggregates interfaces instead of concrete classes, we’re ready to start writing tests.

    public async Task TryToLaunchARocket()
    {
        var rocketLaunchMessage = await rocketQueuePoller.PollForRocketNeedingLaunch();

        if (rocketLaunchMessage == null)
        {
            await Task.Delay(TimeSpan.FromSeconds(5));
            return;
        }

        ...
    }

Let’s start with the first flow in the snippet above, the early return. We will begin by writing a test to cover what happens when we don’t find a rocket to launch:

    /// <summary>
    /// Test setup has become much simpler for our RocketLaunchingLogic class now!
    /// </summary>
    public class RocketLaunchingLogicTests
    {
        [Fact]
        public async Task RocketLaunchingLogic_TriesToLaunchARocketAgain_IfItDoesNotInitiallyFindOne()
        {
            var thrustCalculatorMock = new Mock<IThrustCalculator>();
            var rocketDatabaseRetrieverMock = new Mock<IRocketDatabaseRetriever>();
            var rocketQueuePollerMock = new Mock<IRocketQueuePoller>();
            var rocketLaunchingServiceMock = new Mock<IRocketLaunchingService>();
            var loggerMock = new Mock<ILogger<RocketLaunchingLogic>>();

            var rocketLaunchingLogic = new RocketLaunchingLogic(
                thrustCalculatorMock.Object,
                rocketDatabaseRetrieverMock.Object,
                rocketQueuePollerMock.Object,
                rocketLaunchingServiceMock.Object,
                loggerMock.Object
            );

            // Oh no! This test will literally take 5 or more seconds to execute due to the async Task.Delay that it uses
        }
    }

Wait a second (or 5!). There is no way that waiting 5 seconds for this test to run is acceptable! Clearly this time provider is not going to play nicely with our tests. Perhaps we can make some progress writing a test for a simpler class?

Let’s try to write some tests for the ThrustCalculator instead, as it has no dependencies. Should be pretty easy, right?

    /// <summary>
    /// While the thrust calculator itself has no dependencies, we can't test its functionality with determinism
    /// </summary>
    public class ThrustCalculatorTests
    {
        [Theory]
        [InlineData(1, 1, 1, 1, 1, 1, 5, "thrust is the sum of all values multiplied by 1 as this is the number of sloths to launch")]
        public void ThrustCalculator_ReturnsExpectedValues(int thrustValue1, int thrustValue2, int thrustValue3, int thrustValue4, int thrustValue5, int numberOfSloths, int expectedThrustValue, string explanation)
        {
            var thrustCalculator = new ThrustCalculator();

            var result = thrustCalculator.CalculateThrust(
                thrustValue1,
                thrustValue2,
                thrustValue3,
                thrustValue4,
                thrustValue5,
                numberOfSloths
            );

            // Oh no! This test sometimes fails. But why???? Perhaps it is to do with that pesky DateTime.Now?
            result.Should().Be(expectedThrustValue);
        }
    }

Oh wow… Despite the ThrustCalculator having zero dependencies to orchestrate, we still can’t write reliable tests for it.

    public int CalculateThrust(int input1, int input2, int input3, int input4, int input5, int numberOfSloths)
    {
        var result = (input1 + input2 + input3 + input4 + input5) * numberOfSloths;

        if (DateTime.Now.Hour <= 12)
        {
            return result;
        }
        else
        {
            return result + 10;
        }
    }

Taking a look at the function under test, it seems like the call to DateTime.Now is working against us at certain times of the day.

Luckily for us the next article will tackle the problem of programming against concrete time providers, so that we can unblock writing these unit tests!

Sloth Summary

We’ve covered a lot of ground today!

Takeaway: Concrete Dependencies Create Overlapping Test Cases

We learned that programming against concrete types makes test setup more difficult, duplicates test cases across classes, and makes each of those tests harder to write. Furthermore, if we program against concrete types we also increase the risk of writing integration tests instead of unit tests, which come with a whole bunch of additional complexity!

Takeaway: Vertical Lift and Shift Employs C# Abstraction

We also levelled up the lift and shift by hiding our new class behind a mysterious interface, which we made Visual Studio generate for us. This allowed us to prune the logical dependency tree of the RocketLauncher by not committing ourselves to a specific implementation of RocketLaunchingLogic. Finally the code was simple enough to write some tests!

While writing these tests we explored how mocks allow us to simulate different flows through the class under test and how they can be used to fake outputs from our dependencies, such as testing the often forgotten exceptional flow(s) of a method.

Takeaway: Concrete Dependencies Can Exist Beyond Constructor Injection

Despite our test setup becoming much simpler for our RocketLaunchingLogic class, we’re still not ready to write a test for it. This is because we’d have to wait 5 seconds for the test to run, and that’s just not acceptable for a unit test! We tried to write a test for our ThrustCalculator, because it seemed like a simple class given that it had no dependencies. Despite this, we were once again thwarted by a time provider that destroyed our ability to make the test deterministic.

In the next article we will explore time travel, as we solve the problem of concrete time providers and continue to push ahead with simplifying and unit testing our Code Sloth Rocket Launcher.

See you then!

Other Posts in This Series

Check out the other posts in this series!

- [Series] Your .Net Unit Tests Are Judging You: Why You Should Listen to them

- [Test Refactoring Part 1] Introducing The Problem Code

- [Test Refactoring Part 2] Sticky Loops

- [Test Refactoring Part 3] Concrete Dependencies

- [Test Refactoring Part 4] Hidden Concrete Types

- [Test Refactoring Part 5] Dependency Simplification